In an article published in the journal Scientific Reports, researchers from the UK, Ethiopia, and India developed an innovative robotic harvesting system employing deep learning and computer vision techniques to recognize and grasp fruits. They tested their device in indoor and outdoor environments and highlighted that the new technique achieved promising accuracy and efficiency.

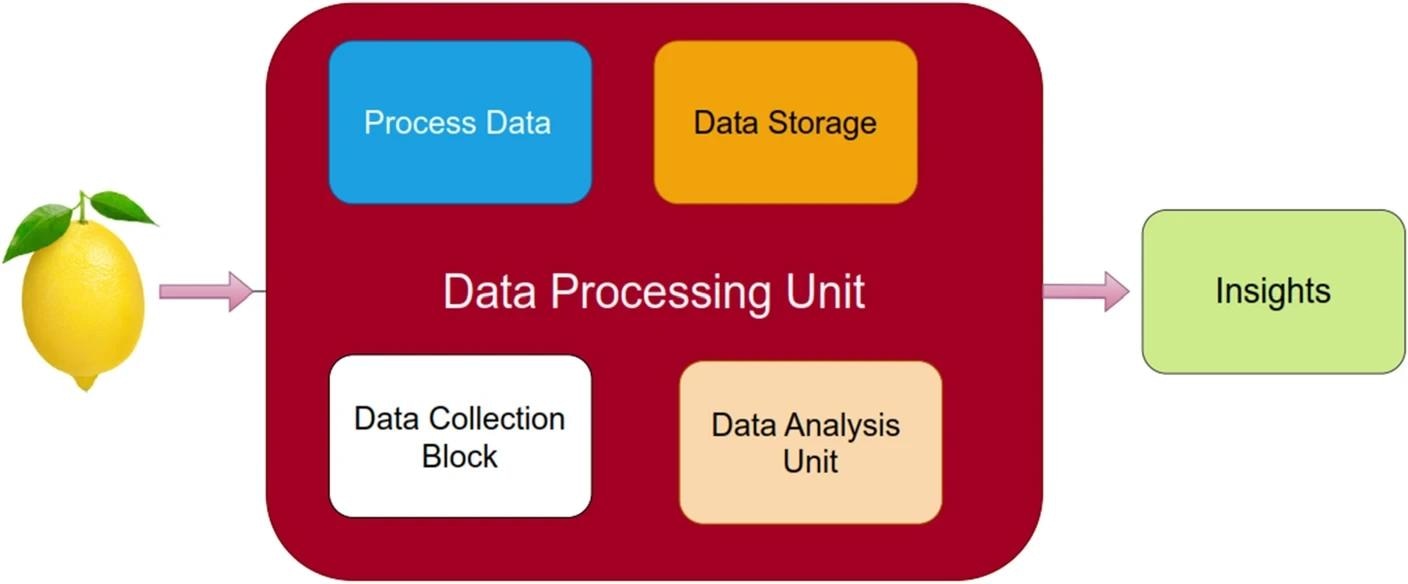

Proposed architecture of intelligent robotics harvesting system process for fruits grasping prediction. Study: https://www.nature.com/articles/s41598-024-52743-8

Background

Fruit harvesting is a labor-intensive and time-consuming task in agriculture, which often requires skilled workers and manual operations. However, due to the increasing demand for high-quality fruits, the shortage of the labor force, and the rising labor costs, there is a need to develop automated and intelligent fruit harvesting systems that can reduce human intervention and improve productivity and quality.

Previously, several robotic systems have been used for fruit harvesting, but they still face many challenges, such as localization, fruit recognition, shape estimation, segmentation, and grasping pose prediction. These challenges require advanced computer vision and machine learning techniques that can handle complex and dynamic scenarios, such as varying lighting conditions, occlusions, clutter, and noise.

About the Research

In the present paper, the authors designed and implemented a robotic harvesting system for performing fruit recognition and grasping, leveraging deep learning-based image processing and a computer vision-based shape estimation and grasping pose prediction approach.

The device comprises a mobile base, a robotic arm, a soft gripper, and a red-green-blue-depth (RGB-D) camera. The mobile base is a vehicle that moves in different directions and carries the robotic arm and the camera. The robotic arm has six degrees of freedom and manipulates the soft gripper to pick the fruits. The soft gripper is made of flexible materials and adapts to different fruit shapes and sizes. The RGB-D camera captures images of the fruits and provides depth information.

The image processing approach has two phases: (1) fruit recognition and segmentation, and (2) shape estimation and grasping pose prediction. The first phase uses a single-shot detection network, a lightweight and fast network that detects and segments multiple fruits in an image simultaneously. The network is based on a simplified computer vision approach and uses the hue, saturation, and value (HSV) channels to extract the fruits' features. It outputs the bounding boxes, class labels, and binary masks of the fruits.
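To illustrate how HSV channels can separate fruit pixels from background, here is a minimal sketch of plain HSV thresholding. It is not the authors' detection network: the function name `hsv_mask`, the hue range, the saturation and value cut-offs, and the tiny sample image are all assumptions chosen for illustration, and only the Python standard library is used.

```python
import colorsys

def hsv_mask(image, h_range, s_min, v_min):
    """Return a binary mask (rows of 0/1) for pixels inside an HSV range.

    image:   rows of (r, g, b) tuples with 0-255 channels.
    h_range: (low, high) hue bounds in degrees; low > high wraps around 0,
             which is needed for red hues.
    """
    low, high = h_range
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            hue = h * 360.0
            if low <= high:
                in_hue = low <= hue <= high
            else:  # wrapped range, e.g. 340..20 degrees
                in_hue = hue >= low or hue <= high
            mask_row.append(1 if in_hue and s >= s_min and v >= v_min else 0)
        mask.append(mask_row)
    return mask

# Hypothetical 2x3 image: red "fruit", green "leaf", and grey "background" pixels.
image = [
    [(200, 30, 30), (210, 40, 35), (40, 160, 50)],
    [(190, 20, 25), (120, 120, 120), (35, 150, 45)],
]
# Assumed thresholds for a red fruit; real values would need tuning per crop and lighting.
red_mask = hsv_mask(image, h_range=(340, 20), s_min=0.4, v_min=0.3)
```

Thresholding in HSV rather than RGB keeps the color decision (hue) largely separate from brightness (value), which tends to help under the varying lighting conditions mentioned earlier.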

The shape estimation and grasping pose prediction phase takes a contour-based approach, relying on the depth information and the OpenCV library to generate the contours of the fruits. The contours are used to estimate the shape, size, and orientation of each fruit and to predict its optimal grasping position. The grasping position is defined as a vector from the geometric center to the visible center of the fruit.
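The grasp definition above can be sketched in a few lines. This is an illustrative assumption of how such a vector might be computed in 2D image coordinates, not the authors' implementation: the visible center is taken as the centroid of the visible mask pixels, the geometric center is passed in as an already estimated value (for example from a fitted shape), and the names `visible_center` and `grasp_vector` are hypothetical.

```python
import math

def visible_center(mask):
    """Centroid (x, y) of the nonzero pixels in a binary mask given as rows of 0/1."""
    sum_x = sum_y = count = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        raise ValueError("mask contains no fruit pixels")
    return sum_x / count, sum_y / count

def grasp_vector(geometric_center, mask):
    """Vector from the geometric center to the visible center, plus its angle in degrees."""
    gx, gy = geometric_center
    vx, vy = visible_center(mask)
    dx, dy = vx - gx, vy - gy
    return (dx, dy), math.degrees(math.atan2(dy, dx))

# Hypothetical 5x5 mask of a fruit whose left side is hidden (columns 0-1 occluded).
mask = [
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 0],
]
# Assumed geometric center of the full fruit, e.g. from a shape fit.
vector, angle_deg = grasp_vector((2.0, 2.0), mask)
```

In this example the vector points toward the unoccluded side of the fruit, which is the direction from which a gripper could approach without hitting the occluding object.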

The system integrated the two phases and provided input and output data for robotic arm control. It used the robot operating system (ROS) and Gazebo to communicate between the camera and the computer and to simulate the robotic harvesting process. Moreover, it was evaluated in indoor and outdoor environments, using various types of fruits, including apples, oranges, and tomatoes.

The authors conducted experiments to test the performance of the system. They used RGB-D images from laboratory and orchard settings and compared the proposed method with two existing methods, sphere-RANSAC and sphere-HT, which use point clouds to estimate the shape of the fruits. Additionally, they considered different scenarios, such as normal, noisy, outlier, and dense clutter conditions.

Research Findings

The results showed that the new method outperformed the existing methods in terms of accuracy and robustness. It achieved an F1 score of 0.94, a recall of 0.826, and an accuracy of 0.9 for fruit recognition and segmentation. For shape estimation and grasping pose prediction in the orchard scenario, it achieved an intersection over union (IoU) of 0.88 using contours with OpenCV, 0.76 using computer vision with pose estimation and HSV, and 0.78 using a computer vision approach with HSV.
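For readers unfamiliar with these metrics, the following sketch shows how IoU and the F1 score are commonly computed. The masks and detection counts are made-up illustration values, not data from the study, and the function names `mask_iou` and `precision_recall_f1` are hypothetical.

```python
def mask_iou(pred, truth):
    """Intersection over union of two binary masks given as rows of 0/1."""
    intersection = union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    return intersection / union if union else 0.0

def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from true-positive, false-positive, false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    # F1 is the harmonic mean of precision and recall.
    return precision, recall, 2 * precision * recall / (precision + recall)

# Hypothetical predicted and ground-truth masks that overlap in one column.
pred = [[1, 1, 0], [1, 1, 0]]
truth = [[0, 1, 1], [0, 1, 1]]
iou = mask_iou(pred, truth)  # 2 shared pixels out of 6 covered by either mask

# Hypothetical detection counts: 8 correct detections, 2 false alarms, 2 missed fruits.
precision, recall, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
```

An IoU of 1.0 means the predicted and reference shapes coincide exactly, while F1 balances missed fruits (recall) against false detections (precision).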

The device also showed better resilience against noise, outliers, and clutter and had a lower orientation error of 6.6 degrees for grasping pose prediction. Moreover, it demonstrated a high success rate of 0.75 and a short cycle time of 6.3 seconds for robotic harvesting.
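The article does not state how the orientation error was measured. One plausible reading, shown here purely as an assumption, is the angle between the predicted grasp direction and a reference direction. The function name `orientation_error_deg` and the sample vectors are hypothetical.

```python
import math

def orientation_error_deg(predicted, reference):
    """Angle in degrees between two 2D direction vectors."""
    px, py = predicted
    rx, ry = reference
    norm = math.hypot(px, py) * math.hypot(rx, ry)
    if norm == 0:
        raise ValueError("direction vectors must be nonzero")
    # Clamp to [-1, 1] to guard against floating-point rounding before acos.
    cosine = max(-1.0, min(1.0, (px * rx + py * ry) / norm))
    return math.degrees(math.acos(cosine))

# Hypothetical predicted and reference grasp directions.
error = orientation_error_deg((1.0, 0.0), (1.0, 0.1))
```

Under this reading, an error of a few degrees means the predicted approach direction is nearly aligned with the reference one.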

The proposed robotic harvesting system holds promise for precision agriculture, offering potential applications in high-value crop harvesting. Its adaptability to different fruit types and environments and integration possibilities with sensors and devices can enhance productivity and quality in fruit harvesting operations.

Conclusion

In summary, the novel method is effective and efficient for recognizing and grasping fruits using deep learning and computer vision techniques. It can perform fruit recognition, segmentation, shape estimation, and grasping pose prediction using a single-shot detection network and a contour-based approach.

The researchers acknowledged limitations and challenges and suggested several ways to improve their method. These include enhancing the network architecture and the training data; developing a more robust and adaptive shape estimation and grasping pose prediction method that can handle more complex and irregular fruit shapes and sizes; and incorporating more feedback and learning mechanisms to improve the robotic arm control and the soft gripper design. They also proposed using advanced sensors, such as thermal, multispectral, and hyperspectral cameras, to capture additional information about the fruits, such as ripeness, quality, and health.