In an article published in the journal Nature Machine Intelligence, researchers from Switzerland and Germany explored whether large language models (LLMs) trained on large amounts of text extracted from the Internet can solve tasks in chemistry and materials science. They fine-tuned generative pre-trained transformer 3 (GPT-3) to answer chemical questions posed in natural language and compared its performance with that of conventional machine learning models across various applications.

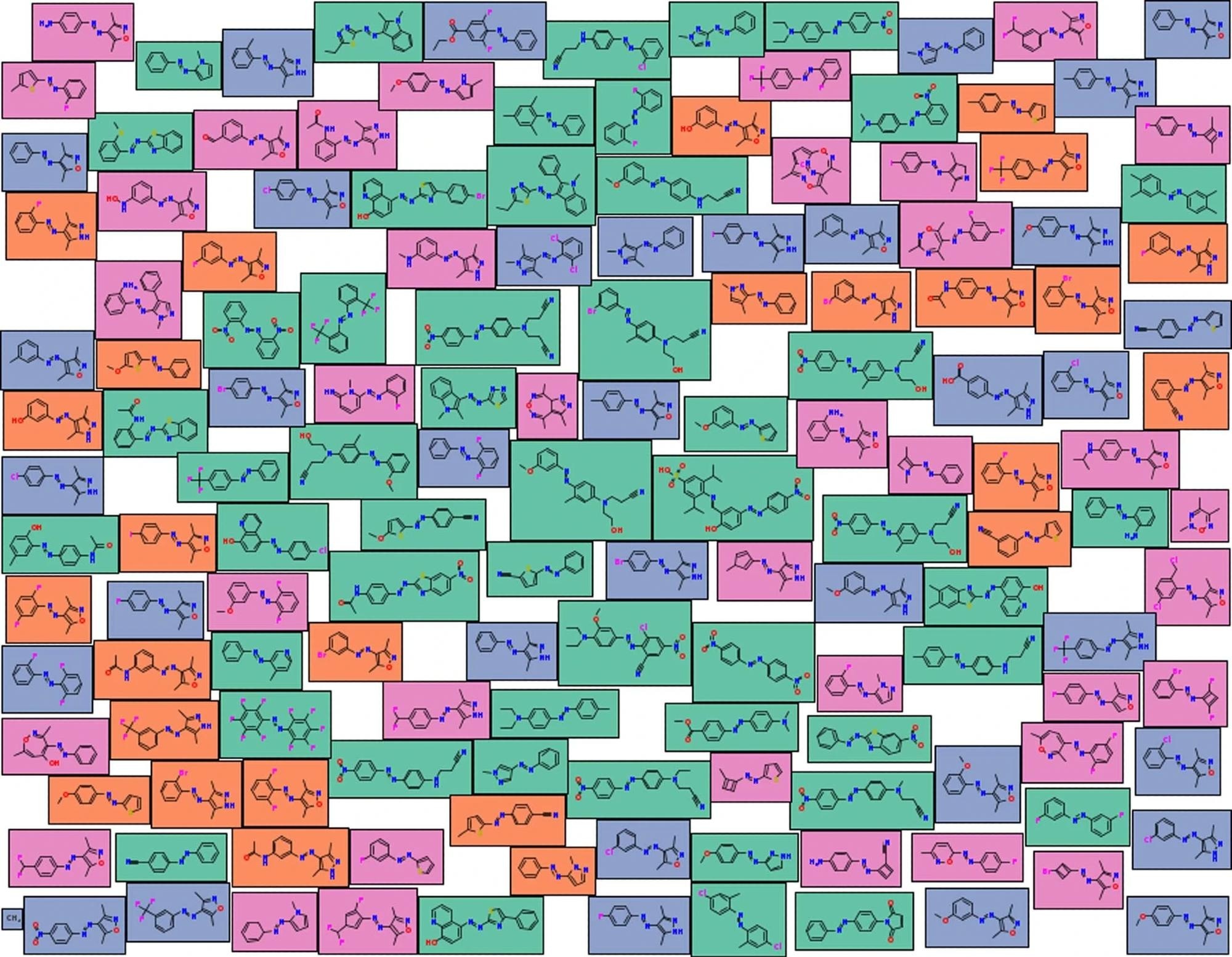

Molecule Cloud generated using the tool reported by Ertl and Rohde. Aquamarine background indicates samples from molecules in the database reported by Griffiths et al. that our model did not generate; coral indicates molecules that our model generated and that are part of Griffiths et al.'s database; light steel blue background indicates samples that were generated by our model and that are not part of the database of Griffiths et al. but are part of the PubChem database. Pale violet-red background indicates molecules that our model generated but that are part of neither PubChem nor the database of Griffiths et al. Study: https://www.nature.com/articles/s42256-023-00788-1


Background

Machine learning has transformed many fields and has recently found applications in chemistry and materials science. However, the small datasets commonly found in chemistry make it difficult to develop effective machine-learning models, and building such models typically requires specialized expertise and chemical knowledge for each application.

LLMs are simple and general models that can learn from any text data and generate natural language text as output. These models have shown remarkable capabilities in various natural language processing tasks, such as text summarization, translation, and generation. Some of these models, such as GPT-3, can also solve regression and classification tasks by fine-tuning them to answer questions in natural language.

About the Research

In the present paper, the authors investigated whether LLMs can answer scientific questions in chemistry and materials science, such as predicting the properties of molecules, materials, and chemical reactions. They used the OpenAI application programming interface (API) to access GPT-3 and fine-tune it on different datasets spanning these properties. They used the same API to generate text completions for new questions and for inverse design problems, in which the model was asked to generate a chemical structure that satisfies a given property. The study employed GPT-3, an LLM with 175 billion parameters, trained on text data sourced directly from the Internet.

The researchers fine-tuned GPT-3 on different datasets and tasks by providing example question-answer pairs in natural language. For example, to predict the phase of a high-entropy alloy, they gave the model questions like “What is the phase of Sm0.75Y0.25?” and answers like “single phase”. The researchers then evaluated the performance of the fine-tuned GPT-3 model on various benchmarks. They also compared the fine-tuned LLM with the original GPT-3 model without fine-tuning to assess the impact of the chemical data on the model’s performance.
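The question-and-answer framing described above can be sketched as a small data-preparation step. The following is a minimal illustration, not the authors' code: it assumes a JSON Lines layout with `prompt` and `completion` fields, and the helper names `make_record` and `to_jsonl` are hypothetical. The first example pair is the one quoted above; the second is a made-up placeholder.

```python
import json


def make_record(composition: str, phase: str) -> dict:
    """Turn one labelled alloy into a natural-language question/answer pair."""
    return {
        "prompt": f"What is the phase of {composition}?",
        "completion": phase,
    }


def to_jsonl(examples) -> str:
    """Serialise (composition, phase) pairs as one JSON object per line."""
    return "\n".join(json.dumps(make_record(c, p)) for c, p in examples)


examples = [
    ("Sm0.75Y0.25", "single phase"),   # pair quoted in the article
    ("A0.50B0.50", "multi-phase"),     # hypothetical placeholder, not real data
]

jsonl_text = to_jsonl(examples)
print(jsonl_text)
```

Each line of the resulting text is one training example, so extending the training set only requires appending more pairs to `examples`.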

The study encompassed various tasks. These included classification tasks like predicting the phase of high-entropy alloys or the yield of chemical reactions, regression tasks such as predicting the highest occupied molecular orbital–lowest unoccupied molecular orbital (HOMO–LUMO) gap of molecules or the Henry coefficient of gas adsorption in porous materials, and inverse design tasks such as generating molecular photoswitches or dispersants with desired properties.
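One way to picture the difference between a property-prediction task and an inverse design task is that the question and the answer swap roles. The sketch below illustrates that idea only. The question wording, the use of a text string (for example a SMILES string) to represent the molecule, the function names, and the numeric gap value are all assumptions made for illustration, not details taken from the study.

```python
def forward_pair(structure: str, gap_ev: float) -> dict:
    """Property prediction: the structure is in the question, the property is the answer."""
    return {
        "prompt": f"What is the HOMO-LUMO gap of {structure}?",
        "completion": f"{gap_ev:.1f} eV",
    }


def inverse_pair(structure: str, gap_ev: float) -> dict:
    """Inverse design: the property is in the question, the structure is the answer."""
    return {
        "prompt": f"What is a molecule with a HOMO-LUMO gap of {gap_ev:.1f} eV?",
        "completion": structure,
    }


# "CCO" is used only as a placeholder string; 3.0 eV is a made-up value.
fwd = forward_pair("CCO", 3.0)
inv = inverse_pair("CCO", 3.0)
print(fwd)
print(inv)
```

The same labelled data can therefore feed both directions; only the template that turns a record into text changes.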

Research Findings

The authors compared the performance of the fine-tuned GPT-3 model with state-of-the-art machine learning models specifically developed for each task, as well as baseline models such as random forests and neural networks. They found that GPT-3 can achieve similar or better accuracy than the conventional machine learning models, especially when the number of training data points is small. For example, for predicting solid-solution formation in high-entropy alloys, GPT-3 needed only 50 data points to reach an accuracy similar to that of the model cited as ref. 24 in the paper, which needed more than 1,000 data points. For predicting the transition wavelengths of molecular photoswitches, GPT-3 outperformed the Gaussian process regression model cited as ref. 43 in the paper with only 92 data points.
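Comparisons of this kind are usually read off a learning curve: accuracy on a fixed test set as a function of the number of training points. The sketch below shows the general shape of such an evaluation and is not the authors' protocol. The `learning_curve` helper, the toy alloy labels, and the majority-class stand-in model are all assumptions; in practice `fit_predict` would wrap a fine-tuned LLM or a conventional model.

```python
import random
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_fit_predict(train_subset, test_inputs):
    """Stand-in model: always predicts the most common training label."""
    top_label = Counter(label for _, label in train_subset).most_common(1)[0][0]
    return [top_label for _ in test_inputs]


def learning_curve(train, test, sizes, fit_predict, seed=0):
    """Test accuracy for each training-set size, using random training subsets."""
    rng = random.Random(seed)
    test_x = [x for x, _ in test]
    test_y = [y for _, y in test]
    curve = {}
    for n in sizes:
        subset = rng.sample(train, n)  # draw n training points without replacement
        curve[n] = accuracy(test_y, fit_predict(subset, test_x))
    return curve


# Synthetic toy data, invented purely to make the sketch runnable.
labels = ["single phase"] * 30 + ["multi-phase"] * 10
toy = [(f"alloy-{i}", label) for i, label in enumerate(labels)]
random.Random(1).shuffle(toy)
train, test = toy[:30], toy[30:]

curve = learning_curve(train, test, sizes=[5, 10, 20], fit_predict=majority_fit_predict)
print(curve)
```

Running the same loop with two different `fit_predict` functions gives two curves, and the training-set size at which they reach the same accuracy is the kind of data-efficiency figure quoted above.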

The results also showed that GPT-3 can generate novel molecules and materials that are not part of the training set. For example, for the inverse design of molecular photoswitches, GPT-3 generated molecules with transition wavelengths close to the target values, some of which are not even in the PubChem database of known chemicals. For the inverse design of molecules with large HOMO–LUMO gaps, GPT-3 generated molecules that are far from the training set, and some of them have HOMO–LUMO gaps above 5 eV, which is rare in the QMugs dataset of 665,000 molecules.
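The novelty check behind statements like these, and behind the four colour groups in the figure caption, comes down to set membership. The sketch below is an illustration under stated assumptions: molecules are compared as plain strings that are assumed to be already canonicalised, the `categorize` function and its category names are hypothetical, and the toy strings stand in for real database contents.

```python
def categorize(generated, reference_db, pubchem):
    """Split molecules into the four groups described in the figure caption."""
    generated = set(generated)
    reference_db = set(reference_db)
    pubchem = set(pubchem)
    return {
        # in the reference database but never produced by the model
        "reference_not_generated": reference_db - generated,
        # produced by the model and present in the reference database
        "generated_in_reference": generated & reference_db,
        # produced by the model, absent from the reference database, present in PubChem
        "generated_in_pubchem_only": (generated - reference_db) & pubchem,
        # produced by the model and present in neither database
        "generated_novel": generated - reference_db - pubchem,
    }


# Toy placeholder strings, not real query results.
groups = categorize(
    generated=["C", "CC", "CCC"],
    reference_db={"C", "O"},
    pubchem={"C", "CC", "O"},
)
print(groups)
```

A real comparison would first convert every structure to a canonical representation, since the same molecule can be written as several different strings.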

Applications

GPT-3 and other LLMs can be powerful tools for predictive chemistry and materials science, as they can leverage the vast amount of text data available on the Internet to learn correlations and patterns relevant to chemical problems. Moreover, their ease of use and high performance can change the fundamental approach to using machine learning in these fields. Instead of developing specialized models for each application, chemists and materials scientists can use a pre-trained LLM to bootstrap a project by fine-tuning it on a small dataset of question-answer pairs and then use it to generate predictions or suggestions for novel molecules and materials.

Conclusion

In summary, LLMs like GPT-3 trained on vast amounts of text extracted from the Internet can easily be adapted to solve chemistry and materials science tasks by fine-tuning them on small datasets of chemical questions and answers. They can outperform conventional machine learning techniques, especially on small datasets. Furthermore, the fine-tuned GPT-3 model can also perform inverse design by simply inverting the questions and generating novel molecules and materials with desired properties.

The research opened up new possibilities for using LLMs as general-purpose tools for predictive chemistry and materials science and raised important questions about the underlying mechanisms and limitations of these models. The authors suggested that future work could explore how to improve the performance and robustness of these models, interpret and validate their predictions, and integrate them with other sources of chemical knowledge and data.