In a paper published in the journal Mathematics, researchers introduced a novel approach to analyzing block ciphers using deep learning (DL) by developing a mixture differential neural network (MDNN) distinguisher, leveraging residual network (ResNet), which significantly boosted accuracy compared to previous methods.

Model architecture. Image Credit: https://www.mdpi.com/2227-7390/12/9/1401


Through experiments on SIMON32/64, they significantly improved accuracy for an eight-round distinguisher. Unlike traditional methods, this approach is less sensitive to the choice of input differences, which makes it more robust. Moreover, extending the distinguisher to 11 rounds enabled a practical key recovery attack on 12-round SIMON32/64. This advancement shows promise for improving distinguisher accuracy on other lightweight block ciphers.

Related Work

Past work has extensively explored the application of machine learning (ML), particularly DL, in various domains, including cryptography. Traditional techniques have been supplemented by innovative approaches like differential neural network distinguishers for block cipher analysis. However, there is still a gap in integrating ML-based key recovery techniques with different forms of cryptanalysis, such as mixture differentials.

Cryptanalysis and DL

SIMON is a family of lightweight block ciphers designed with varying branch and block sizes, utilizing a Feistel structure. Its round function employs basic bitwise operations like AND, XOR, and rotation, making it efficient for constrained platforms. SIMON's parameters vary depending on the specific instance, including block size, key size, and number of rounds.
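The round structure described above can be sketched in a few lines. This is a minimal illustration of SIMON32/64 (16-bit words, 32 rounds) written from the public cipher specification, not code from the paper; the function names are chosen here for illustration only.

```python
MASK = 0xFFFF  # SIMON32/64 works on two 16-bit words
# Constant sequence z0 from the SIMON specification (62 bits, read left to right).
Z0 = "11111010001001010110000111001101111101000100101011000011100110"

def rol(x, r):
    """Rotate a 16-bit word left by r bits."""
    return ((x << r) | (x >> (16 - r))) & MASK

def ror(x, r):
    """Rotate a 16-bit word right by r bits."""
    return ((x >> r) | (x << (16 - r))) & MASK

def f(x):
    """SIMON round function: only AND, XOR, and rotations."""
    return (rol(x, 1) & rol(x, 8)) ^ rol(x, 2)

def expand_key(key_words, rounds=32):
    """Expand a 64-bit key given as (k3, k2, k1, k0) into round keys."""
    k = list(reversed(key_words))  # k[0] is the first round key
    for i in range(4, rounds):
        tmp = ror(k[i - 1], 3) ^ k[i - 3]
        tmp ^= ror(tmp, 1)
        k.append((~k[i - 4] & MASK) ^ tmp ^ int(Z0[(i - 4) % 62]) ^ 3)
    return k[:rounds]

def encrypt(plaintext, round_keys):
    """Feistel iteration: (x, y) -> (y ^ f(x) ^ k, x) for each round key."""
    x, y = plaintext
    for rk in round_keys:
        x, y = y ^ f(x) ^ rk, x
    return x, y
```

Passing only the first r round keys to `encrypt` gives the r-round reduced cipher, which is the kind of target used for round-reduced distinguishers.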

Differential cryptanalysis explores how plaintext differences propagate to ciphertext differences through round functions. In contrast, mixture differential cryptanalysis focuses on the propagation of differences among four plaintexts, offering a variant approach. ML-based methods like neural networks play a crucial role in key recovery attacks, analyzing output pairs from distinguishers to determine candidate subkeys. ResNet, a pioneering architecture in DL, addresses the challenge of training very deep networks by introducing residual connections, which facilitate gradient flow and enable effective training of complex models like differential distinguishers.
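To make the "four plaintexts" idea concrete, the sketch below builds a plaintext quadruple from one base plaintext and two differences. This particular construction, and the example difference values, are assumptions made for illustration; the article does not state the exact construction used in the paper.

```python
def mixture_quadruple(p, delta1, delta2):
    """Build four plaintexts from a base plaintext and two differences.

    Assumed construction (not confirmed by the source):
    (p, p ^ delta1, p ^ delta2, p ^ delta1 ^ delta2).
    """
    return (p, p ^ delta1, p ^ delta2, p ^ delta1 ^ delta2)

def pairwise_differences(quad):
    """Return the differences of the pairs (p0,p1), (p2,p3), (p0,p2), (p1,p3)."""
    p0, p1, p2, p3 = quad
    return (p0 ^ p1, p2 ^ p3, p0 ^ p2, p1 ^ p3)

# Hypothetical 32-bit differences, chosen only for the example.
quad = mixture_quadruple(0x12345678, 0x00000040, 0x00000001)
```

With this construction, two pairs share the first difference, two pairs share the second, and the XOR of all four plaintexts is zero. A classical differential distinguisher looks at one such pair at a time, whereas a mixture distinguisher looks at the whole quadruple.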

MDNN Development Overview

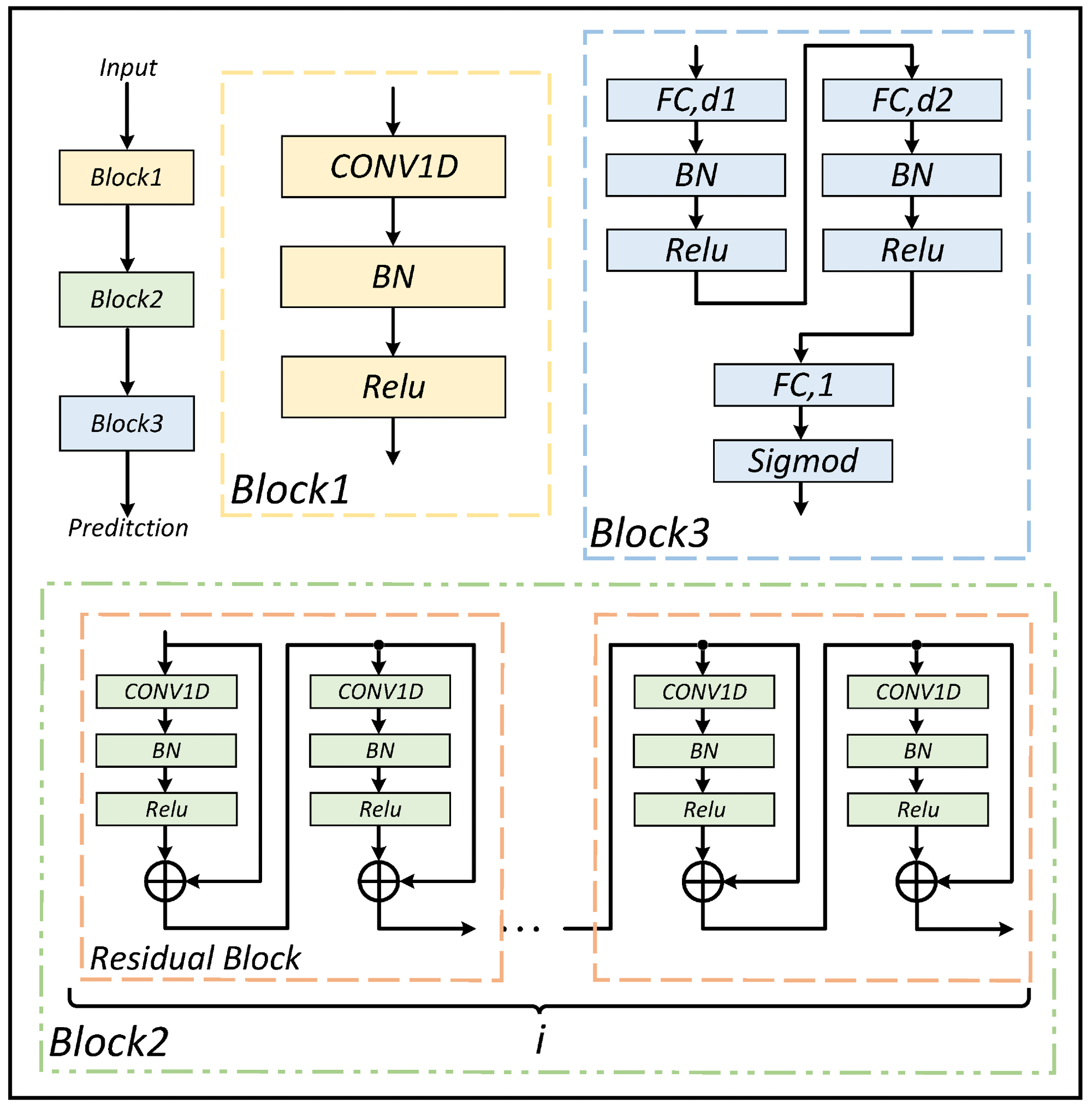

This part of the paper details the development of the eight-round MDNN distinguisher: its construction, data generation, model architecture, and training process. The MDNN's core idea is to combine differential and mixture differential analysis, using a neural network to predict whether a set of ciphertexts originates from plaintexts with the specified input differences. The trained distinguisher is then used to improve accuracy in key recovery attacks.
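The residual connection at the heart of a ResNet can be shown with a toy block. The sketch below uses small dense layers in plain Python purely to illustrate the skip connection; it is an assumption-laden simplification and not the layer configuration of the paper's model.

```python
def relu(v):
    """Element-wise ReLU on a list of floats."""
    return [max(0.0, a) for a in v]

def dense(v, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * a for w, a in zip(row, v)) + b
            for row, b in zip(weights, bias)]

def residual_block(x, w1, b1, w2, b2):
    """Compute relu(x + F(x)), where F is two dense layers with a ReLU between.

    The output of the second layer must have the same length as x
    so that the skip connection can be added element-wise.
    """
    hidden = relu(dense(x, w1, b1))
    fx = dense(hidden, w2, b2)
    return relu([a + r for a, r in zip(x, fx)])
```

If the learned part F outputs zeros, the block reduces to `relu(x)`, so stacking more blocks does not have to degrade the signal. That property is what makes deep networks of this kind easier to train.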

Training uses a balanced dataset made up of ciphertexts from plaintexts with the specified input differences and ciphertexts from random plaintexts, which supports both training and validation. In evaluation, the model shows higher accuracy than classical differential neural network distinguishers. Differential features become harder to detect as the number of encryption rounds grows, but the MDNN remains effective, offering promising results for cryptographic analysis and key recovery.
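A balanced dataset of this kind could be generated roughly as follows. This is a sketch under several assumptions that are not taken from the paper: the quadruple construction, the example differences, the alternating labels, and the use of independent random round keys in place of the real SIMON key schedule (done only to keep the example short).

```python
import random

MASK = 0xFFFF

def rol(x, r):
    """Rotate a 16-bit word left by r bits."""
    return ((x << r) | (x >> (16 - r))) & MASK

def encrypt(state, round_keys):
    """Round-reduced SIMON-like encryption of a (left, right) state."""
    x, y = state
    for k in round_keys:
        x, y = y ^ (rol(x, 1) & rol(x, 8)) ^ rol(x, 2) ^ k, x
    return x, y

def xor_state(a, b):
    """XOR two (left, right) states word by word."""
    return (a[0] ^ b[0], a[1] ^ b[1])

def make_dataset(n, rounds, delta1, delta2, seed=0):
    """Return (samples, labels); label 1 = structured quadruple, 0 = random."""
    rng = random.Random(seed)

    def rand_state():
        return (rng.getrandbits(16), rng.getrandbits(16))

    samples, labels = [], []
    for i in range(n):
        label = i % 2  # alternate labels so the classes are balanced
        # Assumption: fresh independent round keys for every sample.
        keys = [rng.getrandbits(16) for _ in range(rounds)]
        if label == 1:
            p = rand_state()
            quad = [p, xor_state(p, delta1), xor_state(p, delta2),
                    xor_state(xor_state(p, delta1), delta2)]
        else:
            quad = [rand_state() for _ in range(4)]
        samples.append([encrypt(q, keys) for q in quad])
        labels.append(label)
    return samples, labels

# Hypothetical input differences, for illustration only.
samples, labels = make_dataset(8, 8, (0x0000, 0x0040), (0x0000, 0x0001))
```

Each sample holds four ciphertexts; in a real pipeline these would be converted to bit vectors before being fed to the network.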

Key Recovery Methodology

The team then describes how to mount a key recovery attack on 12-round SIMON32/64 using mixture differential neural network distinguishers. The full attack used both seven-round and eight-round mixture differential neural network distinguishers trained specifically for this purpose.

The key recovery attack has three principal stages: the classical mixture differential distinguisher, the neural network distinguisher, and key guessing. In the data generation phase, n plaintext quadruples were encrypted for seven and eight rounds to serve as training data for the mixture differential neural network distinguishers.

A classical mixture differential distinguisher was integrated ahead of the neural network distinguisher, aligning its output difference with the input difference of the neural network to extend the attack to 12 rounds. This setup enabled the decryption of ciphertexts and iteration through potential subkeys, leveraging the neural network's predictions for accurate key recovery.
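The key guessing step can be sketched as follows: peel off the last round with each candidate subkey and let a distinguisher score the result. The function names are hypothetical, and `score_fn` is a stand-in for a trained neural distinguisher; in the attack described here the network would score ciphertext quadruples rather than single states.

```python
MASK = 0xFFFF

def rol(x, r):
    """Rotate a 16-bit word left by r bits."""
    return ((x << r) | (x >> (16 - r))) & MASK

def f(x):
    """SIMON round function."""
    return (rol(x, 1) & rol(x, 8)) ^ rol(x, 2)

def encrypt_round(state, k):
    """One SIMON round: (x, y) -> (y ^ f(x) ^ k, x)."""
    x, y = state
    return (y ^ f(x) ^ k, x)

def decrypt_round(state, k):
    """Inverse of encrypt_round for the same round key."""
    x, y = state
    return (y, x ^ f(y) ^ k)

def rank_subkeys(ciphertexts, candidates, score_fn):
    """Order candidate last-round subkeys by total distinguisher score.

    score_fn is assumed to return a higher value when the partially
    decrypted state looks like output of the reduced-round cipher.
    """
    totals = {k: sum(score_fn(decrypt_round(c, k)) for c in ciphertexts)
              for k in candidates}
    return sorted(candidates, key=lambda k: totals[k], reverse=True)
```

Trying all subkeys exhaustively quickly becomes expensive over several rounds, which is why a guided search over candidate subkeys is attractive.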

The analysts augmented the eight-round mixture differential neural network distinguisher by incorporating two rounds of mixture differential transition, thus expanding the scope of the attack to ten rounds. This structural enhancement ensured consistency between the available difference structure and the input difference used for neural network training. Additionally, strategic manipulation of neutral bits within the plaintext amplified the probability of obtaining desired output differences, effectively reducing data complexity while enhancing attack efficacy.

Bayesian optimization was used to search for candidate subkeys, treating the mixture differential neural network model as a black-box function. The search relies on a wrong key response profile, which records how the model responds when ciphertexts are decrypted with incorrect keys, and uses it to refine the set of candidate subkeys iteratively. The process ends with a set of potential subkeys, and strict acceptance criteria are applied so that the recovered keys are reliable.
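One way a wrong key response profile can guide the search is sketched below. It assumes a precomputed mean `mu[d]` and standard deviation `sigma[d]` of the distinguisher response for each key difference `d`, and it ranks candidates by how well they explain the responses already observed. This scoring rule follows a formulation commonly used in neural-aided cryptanalysis and is an assumption here; the article does not give the paper's exact formula.

```python
def profile_score(candidate, observed, mu, sigma):
    """Weighted squared distance between observed and expected responses.

    observed maps each tried key k to its measured response m. If the
    true key were `candidate`, the expected response for k would be
    mu[k ^ candidate]. Lower scores indicate better candidates.
    """
    return sum(((m - mu[k ^ candidate]) / sigma[k ^ candidate]) ** 2
               for k, m in observed.items())

def propose_keys(observed, mu, sigma, key_bits, n_best):
    """Return the n_best candidates that best match the profile."""
    keys = range(1 << key_bits)
    return sorted(keys, key=lambda c: profile_score(c, observed, mu, sigma))[:n_best]

# Toy 4-bit example with a made-up profile.
mu = [1.0 / (1 + d) for d in range(16)]
sigma = [1.0] * 16
true_key = 0b1011
observed = {k: mu[k ^ true_key] for k in (0, 5, 9)}
best = propose_keys(observed, mu, sigma, key_bits=4, n_best=1)
```

In an iterative search, the proposed keys are tried next, their responses are added to `observed`, and the proposal step is repeated until a candidate passes the acceptance criteria.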

Conclusion

In summary, this paper explored the fusion of mixture differential analysis with ML, specifically training an eight-round MDNN. The MDNN outperformed the traditional differential neural network distinguisher, showing higher prediction accuracy and greater robustness to input differences. Illustrated through a 12-round key recovery attack on SIMON32/64, the MDNN demonstrated its effectiveness.

The paper also details how the wrong key response is computed and how Bayesian optimization is used to search for candidate subkeys. The MDNN achieved higher key recovery accuracy, although at the cost of increased data and time complexity. Future work will focus on optimizing the model architecture to cover more rounds for more complex key recovery attacks.