In a recent article published in the journal Electronics, researchers explored the potential of integrating large language models (LLMs) into digital audio-tactile maps (DATMs) to facilitate self-practice for people with visual impairments (PVIs). They developed a smartphone-based prototype that uses an LLM to provide verbal feedback and evaluation, helping users practice and understand digital maps independently.

Study: LLMs Enhance Digital Maps for the Visually Impaired. Image Credit: THICHA SATAPITANON/Shutterstock

Background

Navigating the world can be challenging for PVIs, who rely heavily on their tactile and auditory senses to understand their surroundings. While various solutions, such as tactile paving, guide volunteers, and guide dogs, exist, PVIs often struggle in complex environments such as tourist attractions or entertainment centers due to the lack of visual information.

Tactile maps, which use embossed patterns to represent spatial information, have been widely adopted to assist PVIs. However, traditional tactile maps often lack the detail and interactivity needed for complex environments. DATMs address this limitation by integrating haptic feedback and audio cues into touchscreen devices, allowing PVIs to explore and interact with virtual representations of their surroundings.

Despite their potential, DATMs require extensive training for PVIs to understand and effectively use the feedback mechanisms. This training necessitates human instructors, which can be a limiting factor due to resource constraints and the need for frequent practice to maintain skills.

About the Research

In this paper, the authors used LLMs, specifically ChatGPT (Chat Generative Pre-trained Transformer), to address the limitations of current DATMs by providing a verbal evaluation of PVIs' perceptions during practice. They hypothesized that LLMs, with their natural language processing and generation capabilities, could replicate the role of a human instructor, offering feedback and guidance to PVIs during self-practice. To test this hypothesis, the researchers developed a smartphone-based DATM application prototype that provided simple floor plans with three elements: the entrance, the restroom, and the user's location.

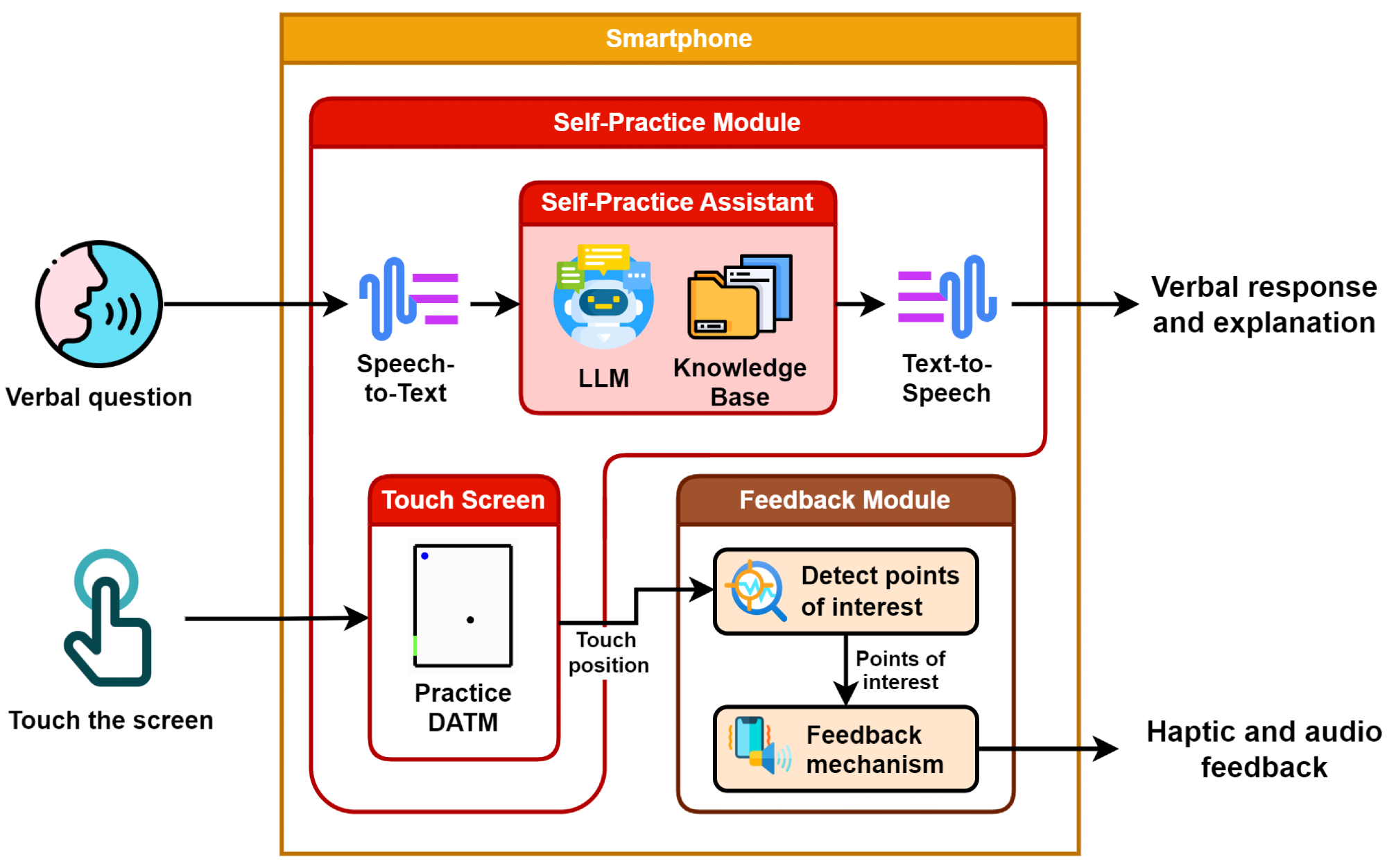

The prototype represented various points of interest (POIs) through haptic feedback (vibrations) and audio cues. The self-practice module leveraged an LLM to interpret users' verbal descriptions of the map and provide real-time feedback on their accuracy and understanding.
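As a rough illustration of how a map like this might tie its elements to feedback, the Python sketch below stores a small floor plan as a grid and looks up a cue when a cell is touched. The grid layout, the single-letter codes, the vibration pattern names, and the `cue_for_touch` helper are all assumptions made for illustration; the article does not describe the prototype's actual encoding.

```python
from dataclasses import dataclass

# Hypothetical cell codes. The three element types come from the article;
# the letters and the grid representation do not.
ENTRANCE, RESTROOM, USER, EMPTY = "E", "R", "U", "."


@dataclass(frozen=True)
class Cue:
    vibration: str  # hypothetical vibration pattern name
    speech: str     # text an app could read aloud


CUES = {
    ENTRANCE: Cue("short-pulse", "Entrance"),
    RESTROOM: Cue("double-pulse", "Restroom"),
    USER: Cue("long-pulse", "You are here"),
}

# A made-up 3x5 floor plan, one character per touchable cell.
FLOOR_PLAN = [
    "..E..",
    ".....",
    "U...R",
]


def cue_for_touch(plan, row, col):
    """Return the Cue for the touched cell, or None for empty or out-of-range cells."""
    if not (0 <= row < len(plan) and 0 <= col < len(plan[row])):
        return None
    return CUES.get(plan[row][col])
```

Touching the cell in row 0, column 2 of this made-up plan would return the entrance cue, while empty cells return `None` so the app stays silent.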

The study involved blindfolded participants divided into two groups: one group interacted with the LLM-based self-practice assistant, while the other group practiced independently. Participants learned two DATMs, one with a regular shape and another with an irregular shape. The LLM assistant was trained with information about the DATMs, including the encoding of elements and the types of inquiries PVIs might have.
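The article does not say how the map information was supplied to the assistant. One plausible approach, sketched below in Python, is to assemble it into a plain-text briefing that could be given to a chat-style LLM as context. The `build_context` function, the wording of the instructions, and the example map details are hypothetical and are not taken from the paper.

```python
def build_context(map_name, elements, encoding):
    """Assemble a plain-text briefing about one map for a chat-style LLM.

    elements: element name -> rough position on the map (hypothetical values)
    encoding: element name -> description of its feedback cue (hypothetical values)
    """
    lines = [
        "You are helping a user practice a digital audio-tactile map.",
        f"Map: {map_name}",
        "Elements and positions:",
    ]
    for name, position in elements.items():
        lines.append(f"- {name}: {position}")
    lines.append("Feedback encoding:")
    for name, cue in encoding.items():
        lines.append(f"- {name}: {cue}")
    lines.append(
        "Compare the user's verbal description with the map and "
        "say what is correct and what is not."
    )
    return "\n".join(lines)


# Example with made-up map details.
context = build_context(
    "regular floor plan",
    {"entrance": "top center", "restroom": "bottom right", "user": "bottom left"},
    {"entrance": "short vibration", "restroom": "double vibration", "user": "long vibration"},
)
```

Keeping the briefing as ordinary text means the same description could be reused for either map shape by swapping the element positions.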

Conceptual design of the LLM-based self-practice module.

Research Findings

The outcomes showed that integrating LLMs significantly improved participants' ability to understand and accurately interpret DATMs. Participants who received LLM-based feedback demonstrated higher accuracy in reconstructing the mental maps of the floor plans compared to those who did not receive feedback. The verbal interactions facilitated by the LLM helped participants better comprehend the layout and spatial relationships within the maps.

The LLM's ability to process and respond to natural language queries allowed it to substitute effectively for a human instructor, offering tailored, context-specific guidance. The experiment highlighted the potential of LLMs to address the shortage of human instructors, enabling PVIs to practice independently and maintain their navigation skills.

Applications

This research has practical applications in assistive technology for people with visual disabilities, particularly in enhancing their ability to navigate and explore new environments independently. Integrating LLMs into DATMs can be especially beneficial in several areas. In education, these tools can help PVIs practice and develop spatial awareness and navigation skills without constant supervision.

For daily living, DATMs can enable PVIs to navigate public spaces, shopping centers, or even their own homes. In mobility training, these models can assist PVIs in learning new routes and environments, promoting greater independence and confidence in travel. Additionally, this approach can be extended to other contexts where verbal feedback is crucial, such as in educational tools for other disabilities or virtual reality applications for training purposes.

Conclusion

The paper concluded that LLMs could significantly enhance the self-practice of DATMs for PVIs, addressing the limited availability of human instructors. The positive results from the experimental prototype suggested that further development and refinement of LLM-based feedback systems could lead to broader adoption and improved accessibility of DATMs in practical applications.

Future work could explore more complex spatial environments and refine feedback mechanisms to provide more detailed and context-sensitive guidance. Additionally, integrating multimodal feedback, such as auditory, tactile, and visual cues, could further enhance the effectiveness of DATMs. The study emphasized the need for ongoing innovation in assistive technologies to support the independence and quality of life for visually impaired individuals.

Journal reference:

- Tran, C. M., et al. Enabling Self-Practice of Digital Audio-Tactile Maps for Visually Impaired People by Large Language Models. Electronics 2024, 13, 2395. DOI: 10.3390/electronics13122395, https://www.mdpi.com/2079-9292/13/12/2395