A recent article posted on the MIT News website introduced a new approach developed by researchers from MIT and other institutions to enhance the safety and stability of robots controlled by complex neural networks (NNs). This framework addresses the limitations of traditional methods by enabling the synthesis and verification of NN controllers with Lyapunov stability guarantees, even in output feedback control scenarios.

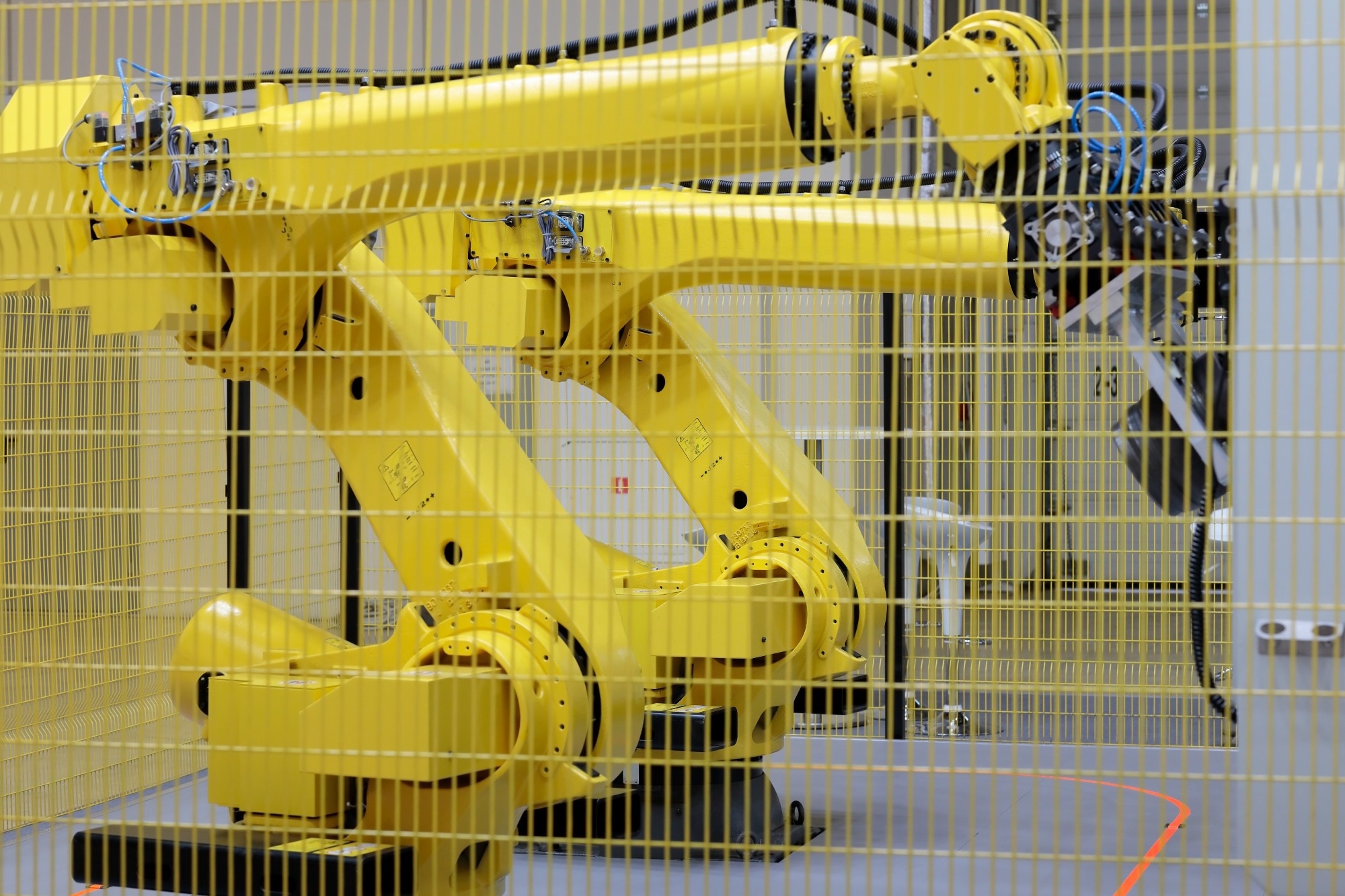

Study: NN Framework Secures Robot Stability with Lyapunov Control. Image Credit: genkur/Shutterstock.com

Study: NN Framework Secures Robot Stability with Lyapunov Control. Image Credit: genkur/Shutterstock.com

Background

Integrating NNs into control systems has transformed robot and autonomous machine design, offering adaptability and efficiency. However, the complexity of NNs poses challenges for ensuring safety and stability. Traditionally, Lyapunov functions have been used to verify system stability by proving a consistent decrease in their value along the system's paths. Applying Lyapunov functions to NN-controlled systems is difficult due to their complexity. Existing methods for verifying Lyapunov conditions often struggle to handle complex machines, limiting the use of NN controllers in safety-critical applications.

About the Research

In this study, the authors aimed to overcome the limitations of synthesizing and verifying Lyapunov-stable NN controllers for general nonlinear dynamical systems with both state and output feedback. They addressed the challenges of existing techniques, which rely on resource-intensive solvers like satisfiability modulo theories (SMT), mixed-integer programming (MIP), or semidefinite programming (SDP), and impose overly restrictive Lyapunov derivative constraints across an explicitly defined region.

The proposed framework introduces a novel formulation that defines a larger certifiable region of attraction (ROA) and removes unnecessary constraints on the Lyapunov time derivative outside the ROA. This approach leverages advancements in NN verification, specifically the α, β-CROWN verifier, which excels in robust verification of larger computer vision NNs and safety verification of NN controllers. The verifier’s ability to exploit network structure and its graphic processing unit (GPU) friendliness enables efficient verification/testing of large networks and fast evaluation of numerous subproblems.

Research Findings

The researchers demonstrated the effectiveness of their formulation through experiments in a simulated environment, successfully guiding a two-dimensional (2D) quadrotor drone equipped with lidar sensors to a stable hover position using limited environmental information. This success was further validated through additional tests involving a simulated inverted pendulum and a path-tracking vehicle, highlighting the algorithm's ability to ensure stable operation under various conditions.

These trials represent a significant advancement over previous NN verification methods, particularly with the inclusion of sensor models. The algorithm's capability to handle sensor data and account for potential disruptions is crucial for developing more robust and reliable robotic controllers.

The results showed that the proposed framework consistently leads to larger ROAs in comparison to state-of-the-art approaches. Notably, the study achieved verified NN controllers and observers for 2D quadrotor and pendulum with output feedback control for the first time. This breakthrough expands the applicability of Lyapunov-stable NN control to real-world scenarios where full-state information is not available.

Applications

This research has significant implications for developing safe and reliable control systems across various domains. In robotics, synthesizing Lyapunov-stable NN controllers with output feedback enables operation in complex, dynamic environments where sensor data is the primary information source. In autonomous vehicles, ensuring stability is crucial for safety, and the proposed framework can help develop robust and verifiable control systems.

In aerospace, Lyapunov-stable NN controllers can enhance the safety and reliability of aircraft and spacecraft control systems, especially in challenging scenarios with limited state information. Additionally, this approach can enable the safe and efficient operation of drones for tasks like delivery, mapping, and surveillance by providing stability guarantees for their control systems.

Conclusion

In summary, the novel framework for Lyapunov-stable neural control proved effective in synthesizing and verifying NN controllers and observers, even for output feedback control scenarios. The research introduced important advancements, such as a new verification formulation, cost-effective adversarial attacks for training, and scalable NN verification techniques. These improvements in verification runtime and certified ROA size highlighted the framework’s potential for the safe and reliable deployment of NN-based controllers in real-world applications.

Future work should explore the framework’s scalability to higher-dimensional systems with more complex observation functions, such as images or point clouds. This would further expand Lyapunov-stable NN control, enabling the development of more sophisticated and reliable autonomous systems.

The authors also suggested extending the method to optimization problems, aiming to minimize the time and distance a robot needs to complete a task while remaining stable. Applying this technique to humanoids and other real-world machines, where robots must remain stable while interacting with their surroundings, could contribute to more robust and adaptable autonomous systems capable of navigating complex environments with greater safety and efficiency.