In a paper published in the journal Scientific Reports, researchers improved image recognition (IR) technology by enhancing dense convolutional networks (DenseNet) with gradient quantization (GQ). The approach lets layer parameters be updated independently and reduces communication time and data volume during parallel training.

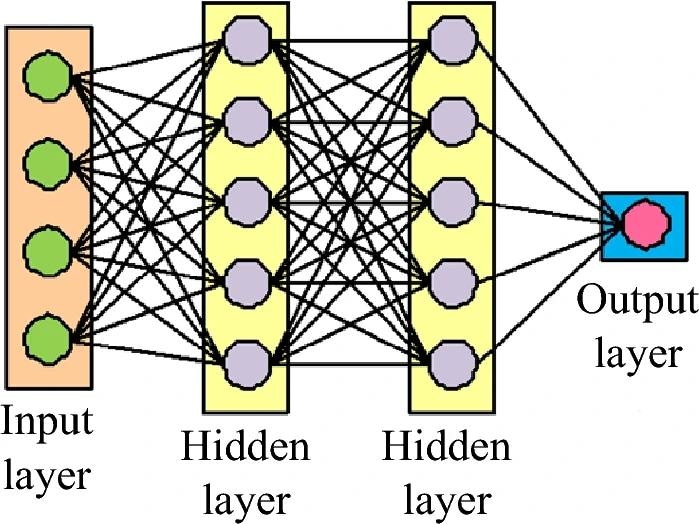

Schematic diagram of CNN structure. Image Credit: https://www.nature.com/articles/s41598-024-58421-z

This strategy maintains recognition accuracy while boosting model parameter efficiency, achieving higher accuracy than models such as EfficientNet by adjusting learning rates and deepening network layers. The parallel acceleration algorithm outperforms traditional methods, ensuring fast training and overcoming communication bottlenecks. Overall, the model improves the accuracy and speed of IR, expanding its applications in computer vision.

Background

IR has advanced significantly, driven by the proliferation of digital images and the rising demand for artificial intelligence. IR is vital in computer vision, enabling automation across industries and ensuring precise organization and analysis of visual data. Its accuracy surpasses manual methods, fostering efficiency in diverse applications. Learning paradigms such as supervised, unsupervised, and self-supervised learning, alongside algorithms such as Bayes classifiers, decision trees (DT), support vector machines (SVM), and neural networks, have been widely used.

DenseNet With GQ

The research focuses on designing an accurate IR model by leveraging the DenseNet architecture and GQ. Given the increasing complexity of IR applications, the study aims to extract effective features from image data while minimizing training costs. It enhances DenseNet's feature reuse method and applies GQ to the synchronous data parallel training mode to compress communication data, thereby accelerating training.

Initially, the study adopts a deep neural network (DNN) model, which can automatically learn target features and process high-dimensional data. Convolutional neural networks (CNNs) are prominently used for IR due to their strong feature extraction and classification capabilities. DenseNet, a CNN architecture in which each layer within a dense block receives the feature maps of all preceding layers, was chosen for its efficient feature reuse and simplified structure, which mitigates issues like gradient vanishing. Its structure includes dense connection modules, bottleneck layers, conversion (transition) layers, and classifiers.
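To make the dense connectivity concrete, the sketch below counts how many input channels each layer in a dense block sees when every layer's output is concatenated onto all earlier feature maps. The function name and the example values (16 input channels, growth rate 12) are illustrative assumptions, not figures from the paper.

```python
def dense_block_channels(in_channels, growth_rate, num_layers):
    """Return (inputs seen by each layer, channels leaving the block).

    Assumes plain dense connectivity: each layer emits `growth_rate`
    new feature maps that are concatenated onto everything before it.
    """
    inputs_per_layer = []
    channels = in_channels
    for _ in range(num_layers):
        inputs_per_layer.append(channels)  # layer reads all earlier maps
        channels += growth_rate            # its own maps are appended
    return inputs_per_layer, channels


# Hypothetical block: 16 input channels, growth rate 12, 3 layers.
per_layer, out_channels = dense_block_channels(16, 12, 3)
print(per_layer, out_channels)
```

The linear growth in input width is what makes the growth rate the main lever on a DenseNet's parameter count and memory use.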

The study aims to optimize the efficiency of DenseNet by adjusting the growth rate and network width. However, challenges like reduced model parameter efficiency and excessive memory usage remain. The analysis proposes two improvements to overcome these issues: reducing the scale of DenseNet and randomly discarding feature maps during feature reuse.
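One way to picture the second improvement is as a random filter over the earlier feature maps that a layer would otherwise reuse. The sketch below is only an illustration under stated assumptions: the keep probability, the rule that the most recent map is always retained, and the name `select_reused_maps` are hypothetical and not taken from the paper.

```python
import random


def select_reused_maps(feature_maps, keep_prob, rng):
    """Randomly discard earlier feature maps before they are reused.

    Assumption: the most recent feature map is always kept so the
    layer never receives an empty input.
    """
    if not feature_maps:
        return []
    *earlier, latest = feature_maps
    kept = [fm for fm in earlier if rng.random() < keep_prob]
    kept.append(latest)
    return kept


rng = random.Random(0)  # fixed seed so the sketch is repeatable
maps = ["layer0", "layer1", "layer2", "layer3"]
print(select_reused_maps(maps, keep_prob=0.5, rng=rng))
```

Dropping some of the reused maps shrinks the concatenated input, which is how such a scheme could reduce memory use at the cost of some redundancy in features.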

On the parallel training front, the study enhances the synchronous data parallel (SDP) algorithm by introducing GQ to reduce communication bottlenecks. The improved algorithm updates the parameters of each layer independently, which allows computation and communication to proceed in parallel. Quantizing the gradients reduces the volume of data communicated, which accelerates training.
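The article does not specify the quantization scheme, so the following is a minimal sketch assuming simple uniform 8-bit quantization with one scale factor per layer. Each layer's gradient is quantized on its own, which is what would let layers be sent and updated independently. The layer names and gradient values are made up for illustration.

```python
def quantize(grad, bits=8):
    """Map float gradients to signed integer codes plus one float scale."""
    levels = 2 ** (bits - 1) - 1                 # 127 for 8 bits
    scale = max(abs(g) for g in grad) or 1.0     # avoid dividing by zero
    codes = [round(g / scale * levels) for g in grad]
    return codes, scale


def dequantize(codes, scale, bits=8):
    """Recover approximate float gradients on the receiving side."""
    levels = 2 ** (bits - 1) - 1
    return [c * scale / levels for c in codes]


# Hypothetical per-layer gradients; each layer is quantized independently.
layer_grads = {"conv1": [0.02, -0.5, 0.31], "fc": [0.0, 0.004, -0.001]}
packets = {name: quantize(g) for name, g in layer_grads.items()}
restored = {name: dequantize(codes, scale)
            for name, (codes, scale) in packets.items()}
print(packets["conv1"][0], restored["conv1"])
```

Sending 8-bit codes instead of 32-bit floats cuts the gradient payload to roughly a quarter, at the price of a rounding error bounded by half a quantization step per element.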

Additionally, the study introduces a parameter averaging interval to further improve model performance and convergence. In sum, the model combines optimized feature reuse in DenseNet with a GQ-improved SDP algorithm, aiming for efficient feature extraction and faster training to meet the evolving demands of complex IR applications.
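A parameter averaging interval can be sketched as workers training locally and synchronizing only every few steps. The toy below assumes each worker minimizes its own quadratic loss 0.5 * (w - target)^2; the objective, learning rate, and interval are hypothetical stand-ins rather than the paper's setup.

```python
def average_params(workers):
    """Replace every worker's parameters with the element-wise mean."""
    n = len(workers)
    mean = [sum(vals) / n for vals in zip(*workers)]
    return [list(mean) for _ in workers]


def local_sgd(workers, targets, lr, steps, avg_interval):
    """Run local gradient steps, averaging every `avg_interval` steps."""
    for step in range(1, steps + 1):
        # Gradient of 0.5 * (w - t)^2 with respect to w is (w - t).
        workers = [
            [w - lr * (w - t) for w, t in zip(params, target)]
            for params, target in zip(workers, targets)
        ]
        if step % avg_interval == 0:
            workers = average_params(workers)  # the only communication
    return workers


result = local_sgd(workers=[[0.0], [4.0]], targets=[[1.0], [3.0]],
                   lr=0.5, steps=4, avg_interval=2)
print(result)
```

A longer interval means fewer synchronization rounds but lets worker parameters drift further apart between averages, so the interval trades communication cost against convergence behaviour.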

Enhancing DenseNet IR Performance

The testing experiment aimed to assess the performance of the improved DenseNet IR model across various depths and evaluate the impact of network depth on recognition accuracy. The study analyzed three DenseNet variants—DenseNet-50, DenseNet-100, and DenseNet-200—each featuring unique architectural configurations. These models were subjected to comprehensive testing to examine their recognition effectiveness and computational efficiency, employing the CIFAR-10 dataset for training and evaluation.

The experimental setup involved training DenseNet models on the CIFAR-10 dataset with parameters such as batch size, learning rate, and normalization preprocessing standardized across experiments. Training ran iteratively, with periodic adjustments to the learning rate to improve model convergence. The recognition accuracy and loss curves of the DenseNet models at different depths were analyzed, highlighting the influence of learning rate adjustments and network depth on model performance.
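Periodic learning rate adjustment is commonly implemented as a step schedule, where the rate is multiplied by a fixed factor at chosen epochs. The sketch below assumes that form; the base rate, milestones, and decay factor are hypothetical and are not the values used in the study.

```python
def step_lr(base_lr, epoch, milestones, gamma=0.1):
    """Return the learning rate for `epoch` under a step schedule.

    The rate is multiplied by `gamma` once for every milestone
    that has already been reached.
    """
    drops = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** drops


# Hypothetical schedule: start at 0.1, decay tenfold at epochs 150 and 225.
for epoch in (0, 149, 150, 225):
    print(epoch, step_lr(0.1, epoch, milestones=[150, 225]))
```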

Evaluation metrics such as receiver operating characteristic (ROC) curves and area under curve (AUC) values were utilized to assess the performance of the model. The DenseNet-200 model exhibited superior performance compared to other models, demonstrating higher accuracy and AUC values. Comparisons with alternative image classification models, such as visual geometry group (VGG) and EfficientNet, highlighted the robustness and accuracy of the DenseNet-100 model across various datasets.
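For readers unfamiliar with AUC, it equals the probability that a randomly chosen positive example scores higher than a randomly chosen negative one. The sketch below computes it directly from that definition for a binary case; for a multi-class dataset such as CIFAR-10 it would typically be applied per class in a one-vs-rest fashion, which is an assumption here rather than a detail from the article. The labels and scores are invented.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison.

    Counts the fraction of (positive, negative) pairs in which the
    positive example receives the higher score; ties count as half.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy binary example: three of the four positive/negative pairs
# are ranked correctly.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```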

Performance testing extended to the improved SDP algorithm, focusing on acceleration ratios and training times across DenseNet models. The analysis showed that the GQ improvement enhances computational efficiency, an effect particularly evident in deeper networks. Although communication bottlenecks still arose in certain scenarios, the GQ-based data parallelism algorithm significantly reduced training time and improved processing efficiency.
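The acceleration ratio is conventionally the single-node training time divided by the parallel training time. The sketch below pairs that definition with a deliberately simple cost model (compute divided across workers plus a fixed communication cost) to show why shrinking the gradient payload raises the ratio. The cost model and all numbers are hypothetical, not measurements from the paper.

```python
def parallel_iteration_time(compute_time, comm_bytes, bandwidth, workers):
    """Toy cost model: compute is split evenly, communication is not."""
    return compute_time / workers + comm_bytes / bandwidth


def acceleration_ratio(single_time, parallel_time):
    """Speedup of the parallel run relative to a single node."""
    return single_time / parallel_time


compute = 8.0      # seconds per iteration on one node (made up)
bandwidth = 100.0  # bytes per second (made up)

full = parallel_iteration_time(compute, comm_bytes=400.0,
                               bandwidth=bandwidth, workers=4)
# Assumes 32-bit gradients quantized to 8 bits: a quarter of the bytes.
quantized = parallel_iteration_time(compute, comm_bytes=100.0,
                                    bandwidth=bandwidth, workers=4)

print(acceleration_ratio(compute, full))
print(acceleration_ratio(compute, quantized))
```

In this model the communication term does not shrink as workers are added, so it caps the achievable ratio; reducing the payload is what lifts that cap.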

The results underscore the effectiveness of the DenseNet IR model, particularly in scenarios involving deep network architectures and large-scale image datasets. By optimizing model parameters and leveraging innovative algorithms such as GQ-based parallel processing, the study contributes to advancements in IR technology, offering robust and efficient solutions for various real-world applications.

Conclusion

The research devised an accurate DenseNet-based IR model, improving feature reuse and parallel computing efficiency. Experimental findings showed stable accuracy across different DenseNet variants, with deeper networks yielding better recognition. DenseNet-100 surpassed VGG and EfficientNet models in accuracy. The GQ-based parallel acceleration algorithm notably boosted computational efficiency. Future studies should explore more complex parameter server architectures to further increase training speed.