A critical flaw in existing diffusion models has been fixed, enabling single-step depth estimation with unmatched speed and accuracy. Researchers also demonstrated that fine-tuning Stable Diffusion achieves competitive results, revolutionizing the field of depth prediction.

Research: Fine-Tuning Image-Conditional Diffusion Models is Easier than You Think

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

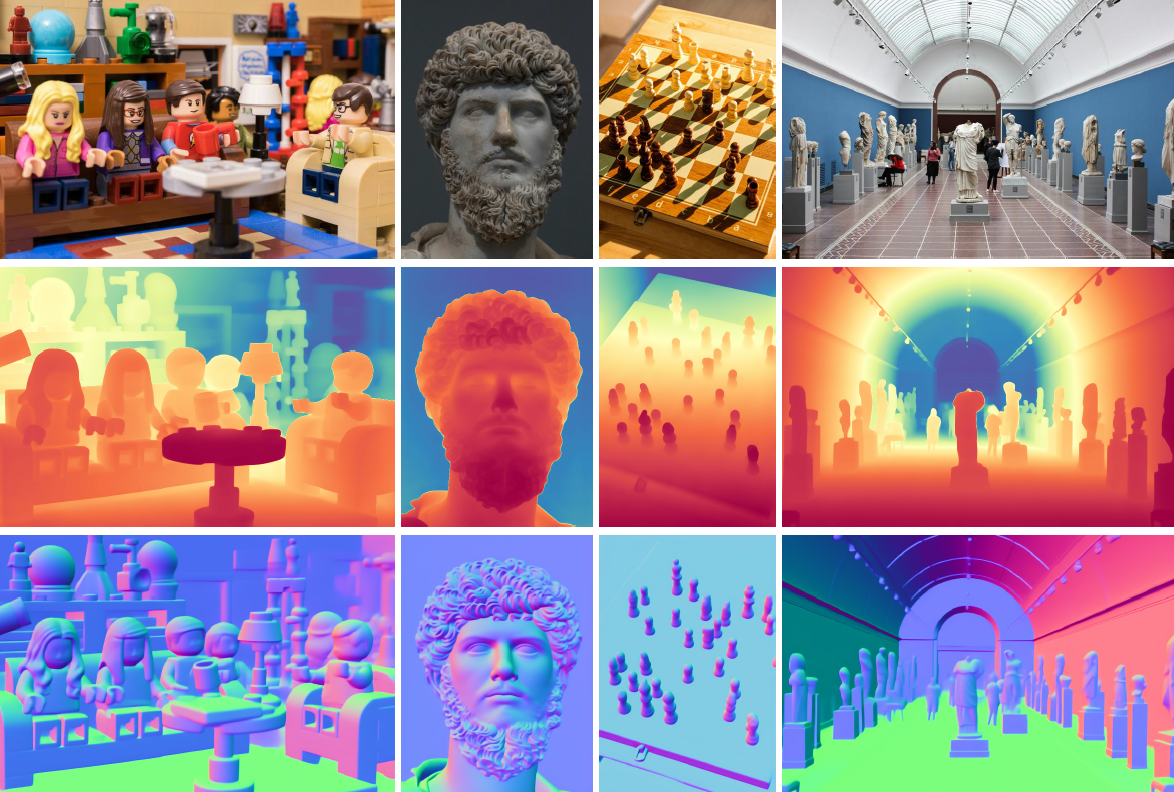

In an article recently submitted to the arXiv preprint* server, researchers in Germany and the Netherlands addressed the optimization of large diffusion models for monocular depth estimation. This task previously faced challenges due to computational inefficiency in multi-step inference. The paper identified a critical flaw in the Denoising Diffusion Implicit Models (DDIM) scheduler, specifically a mismatch between the noise level and timestep encoding, which caused the model to generate nonsensical predictions during single-step inference. The authors demonstrated that a corrected model achieved performance comparable to state-of-the-art depth estimation models while being over 200 times faster.

Additionally, the paper presented a fine-tuning approach using task-specific losses to further improve the model's performance on depth and normal estimation tasks. The fine-tuning process also worked with Stable Diffusion, directly adapting it to depth estimation and achieving results comparable to the optimized Marigold model, challenging prior conclusions about the need for complex architectures. Surprisingly, the fine-tuning protocol also enhanced stable diffusion, matching the performance of leading diffusion-based models.

Background

Monocular depth estimation is a critical task in various applications, such as scene reconstruction, robotic navigation, and video editing. However, this task is challenging due to the inherent ambiguity of depth perception from a single image, which requires the incorporation of strong semantic priors.

Recent research has adapted large diffusion models for this task by framing depth estimation as a conditional image generation task. These models have demonstrated high performance but faced significant speed limitations due to the computationally expensive multi-step inference processes.

Previous works, such as Marigold and its follow-ups, have shown promising results in terms of depth and surface normal estimation using diffusion models. However, their slow inference times have hindered broader practical applications. While some attempts, like DepthFM, have aimed to reduce the computational burden, the issue of efficiency has remained largely unresolved.

This paper addressed a critical scheduling flaw in the DDIM inference pipeline of models like Marigold, which had led to unnecessary inefficiency. The flawed scheduler caused misalignment between the timestep encoding and the noise level, resulting in nonsensical depth predictions during single-step inference. By fixing this flaw using a "trailing" setting that correctly aligns the noise and timestep, the authors demonstrated that single-step inference could achieve performance comparable to multi-step processes while being over 200 times faster. Additionally, the paper explored task-specific fine-tuning, showing that it outperformed more complex architectures, filling the gaps left by previous methods.

Depth Estimation with Conditional Latent Diffusion

The researchers discussed conditional latent diffusion models (LDM), specifically focusing on how the Marigold model utilized these for depth estimation. LDMs operated in the latent space of another model, such as a variational autoencoder (VAE), which helped compress inputs and reconstruct them with better accuracy.

In conditional diffusion models, both the forward and reverse processes were conditioned on an additional input, like an image, to denoise and refine the output. Marigold leveraged LDMs to perform depth estimation by conditioning on images and adapting the Stable Diffusion v2 architecture for training.

The goal was to predict a depth map through a process incorporating noise to simulate the depth distribution. However, the DDIM scheduler in Marigold’s original setup introduced a flaw in the single-step inference, as the noise level did not match the expected timestep encoding. The model produced much more accurate single-step predictions by fixing this flaw using a trailing setting that aligned the noise and timestep. This fix was critical for enabling fast, accurate single-step predictions and highlighted the potential for diffusion models to operate at high speed without sacrificing accuracy.

Fine-tuning and Experiments

The researchers explored the limitations and improvements of diffusion-based depth estimation models. While producing detailed outputs, these models often displayed artifacts like blurring and over-sharpening due to the diffusion training objective, which was designed for denoising rather than the specific task of depth prediction.

To address this, an end-to-end fine-tuning approach was applied, allowing direct refinement of the model for depth prediction tasks. The fine-tuning process involved modifications such as fixing the timestep and replacing noise with a mean value, enabling the model to focus on single-step predictions. This approach was successfully applied to both Marigold and directly to Stable Diffusion, showing that even simpler models could achieve state-of-the-art results.

The evaluation was conducted using benchmark datasets like NYUv2, ScanNet, and KITTI, assessing both depth estimation and surface normal prediction. The fine-tuned models demonstrated significant improvements, outperforming baseline models like Marigold in both indoor and outdoor environments. The study also experimented with different noise types during fine-tuning and found that using zero noise yielded slightly better results.

Compared with state-of-the-art methods, the fine-tuned models achieved competitive results, particularly excelling in surface normal estimation. The results suggested that deterministic feedforward models, such as fine-tuned versions of Stable Diffusion, could produce high-quality depth maps with minimal computational overhead, opening new avenues for diffusion-based models.

Conclusion

In conclusion, the researchers successfully optimized large diffusion models for monocular depth estimation by addressing a critical flaw in the inference pipeline. This enabled over 200 times faster performance while maintaining accuracy comparable to state-of-the-art methods.

The introduction of an end-to-end fine-tuning approach not only improved depth and normal estimation but also enhanced stable diffusion outcomes. The study’s results challenge previous assumptions, showing that task-specific fine-tuning can outperform more complex, multi-step processes. These advancements suggested that further refinements in diffusion models could yield even more reliable results for geometric tasks, paving the way for exciting future research in this area. The findings indicated a significant leap in efficiency and applicability for monocular depth estimation technologies.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Garcia, G. M., Zeid, K. A., Schmidt, C., de Geus, Daan, Hermans, A., & Leibe, B. (2024). Fine-Tuning Image-Conditional Diffusion Models is Easier than You Think. ArXiv.org. DOI: 10.48550/arXiv.2409.11355, https://arxiv.org/abs/2409.11355