By combining automation with ethics, researchers are pioneering an AI decision-making framework that outperforms humans in disaster scenarios, bringing speed, safety, and fairness to crisis management.

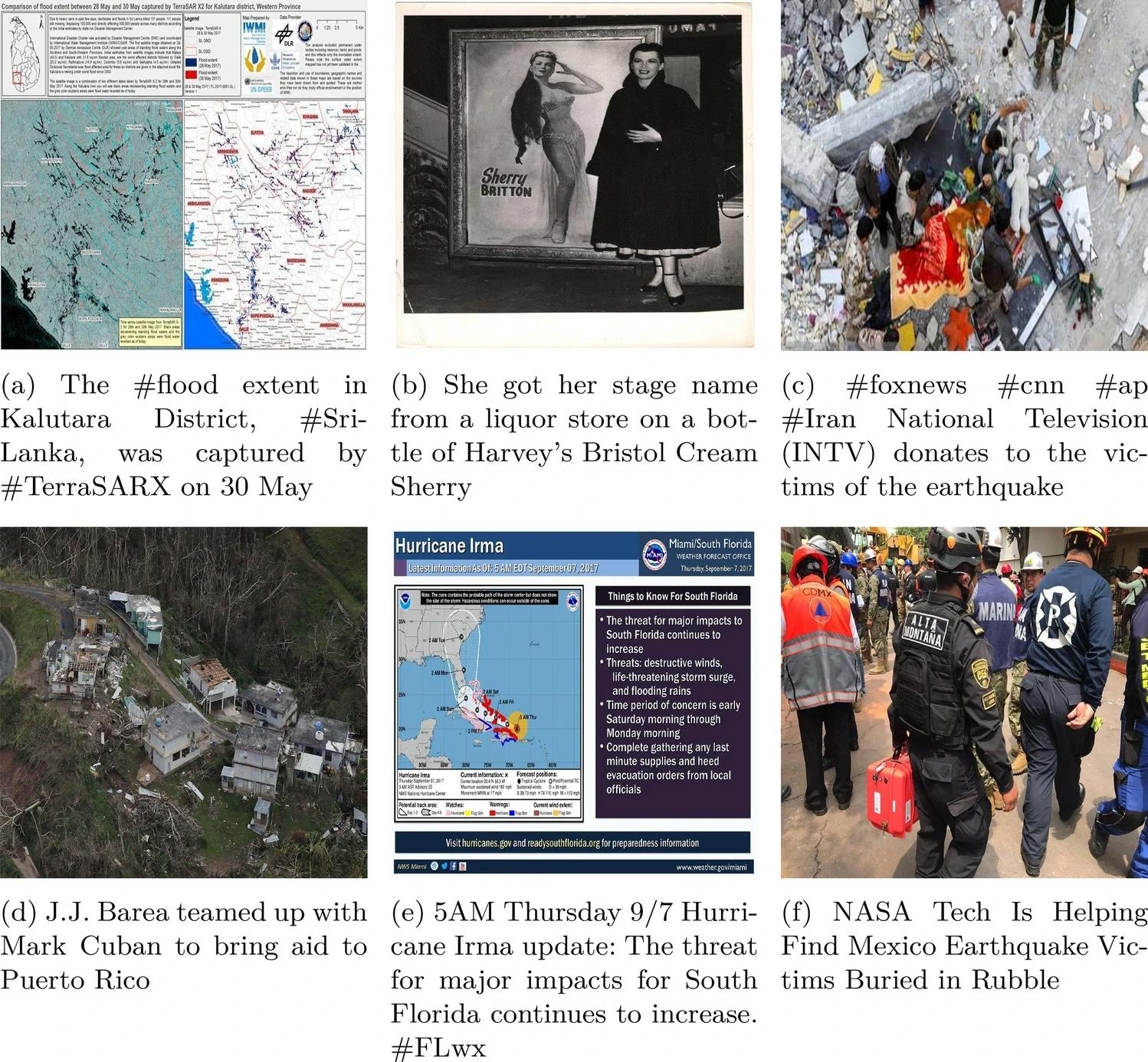

Example data from the CrisisMMD dataset, used to train Enabler agents across Scenario Levels 1, 2, and 3 corresponding to the following data categories: (a) Informative, (b) Not-informative, (c) Affected individuals, (d) Infrastructure/utility damage, (e) Other relevant information, and (f) Rescue/volunteering efforts.

AI in Disaster Response

In an unfolding disaster situation, quick decisions must be made on how to respond and where to direct resources to preserve life and aid recovery efforts. With the rapid development of AI, uncrewed aerial vehicles (UAVs) and satellite imagery, initial efforts have already been made to apply AI to disaster situations, giving quick insights to response teams and autonomously making decisions on relief operations. But whilst AI may speed up processes, there is a risk that errors or bias could have severe consequences.

Implementing Ethical AI Use

Now, a research team led by Cranfield University is addressing the challenge of balancing the advantages of automation with the need to ensure safety, fairness, and ethical use by developing a structured AI decision-making framework. In the study, the proposed framework made more consistent and accurate decisions than both human operators and conventional AI, with 39% higher accuracy than humans across various scenarios.

"Bringing AI into disaster response is not just about creating smarter algorithms," said Argyrios Zolotas, Professor of Autonomous Systems & Control at Cranfield University. "It's about helping to facilitate faster, safer, and more resilient decision-making when lives and critical infrastructure are at risk. It's vital that we go forward in a responsible way to ensure that AI use is ethical, transparent and provides reliable outcomes. Our work in this study gives a valuable view of one way this can be achieved."

Key Research Outcomes

The researchers focused on three outputs:

- Designing a novel framework for autonomous decision-making in safety-critical scenarios, giving a foundation for responsible AI applications in disaster management.

- Developing an AI agent that uses this framework to enhance its choices during a crisis.

- Validating the AI's effectiveness through a human evaluation study, showing its potential to support human operators.

The structured AI framework proposed in this study demonstrated 60% greater stability in making consistently accurate decisions across multiple scenarios, providing more predictable outcomes than systems that rely on human judgment. The framework could pave the way for more responsible and effective AI in real-world emergencies.

Publication

The paper "Structured Decision Making in Disaster Management" is published in the journal Nature Scientific Reports.

Research Collaboration

The research was undertaken as part of an MSc Applied AI project by Julian Gerald Dcruz, supervised by Dr Miguel Arana-Catania and Professor Argyrios Zolotas of Cranfield University's Centre for Assured and Connected Autonomy, in collaboration with Niall Greenwood of Golden Gate University.
