The artificial intelligence chatbot ChatGPT appeared to improvise ideas and make mistakes, much like a student, in a study that rebooted a 2,400-year-old mathematical challenge.

The experiment, conducted by two education researchers, asked the chatbot to solve a version of the "doubling the square" problem, a lesson described by Plato in about 385 BCE and, the paper suggests, "perhaps the earliest documented experiment in mathematics education." The puzzle sparked centuries of debate about whether knowledge is latent within us, waiting to be 'retrieved', or something that we 'generate' through lived experience and encounters.

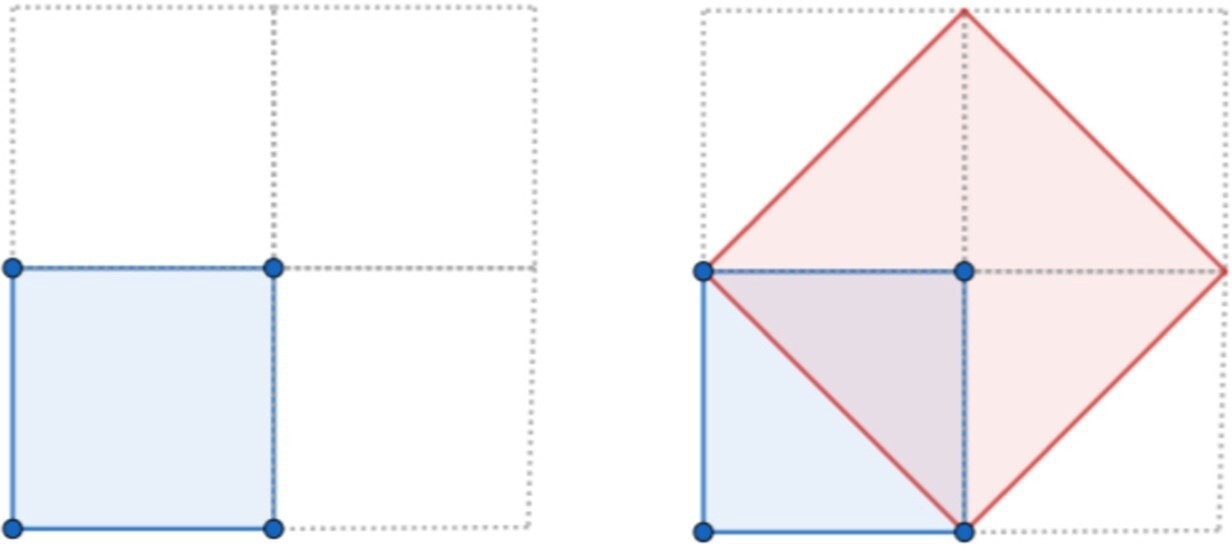

Doubling the Square problem: Given a square, find another square with twice the area.

The Study

The new study, published in the International Journal of Mathematical Education in Science and Technology, explored a similar question about ChatGPT's mathematical 'knowledge' as perceived by its users. The researchers wanted to know whether it would solve Plato's problem using knowledge it already 'held', or by adaptively developing its own solutions.

Plato describes Socrates teaching an uneducated boy how to double the area of a square. At first, the boy mistakenly suggests doubling the length of each side, but Socrates eventually leads him to understand that the new square's sides should be the same length as the diagonal of the original.
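The two answers in the dialogue can be compared with a short area check. This is only an illustrative sketch: the side length $s$ is notation assumed here, not taken from the study, and the `\text` command assumes the `amsmath` package. Each diagonal cuts a copy of the original square into two triangles of area $s^2/2$, and the square built on the diagonal is made up of four such triangles.

```latex
\[
(2s)^2 = 4s^2
\quad \text{(the boy's first guess: four times the area, not twice)}
\]
\[
4 \cdot \frac{s^2}{2} = 2s^2
\quad \text{(the square on the diagonal: four half-squares)}
\]
```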

The researchers presented this problem to ChatGPT-4, first imitating Socrates' questions and then deliberately introducing errors, queries, and new variants of the problem.

Unexpected Responses

Like other large language models (LLMs), ChatGPT is trained on vast collections of text and generates responses by predicting sequences of words that it has learned during its training. The researchers expected it to handle the Ancient Greek maths challenge by regurgitating its pre-existing knowledge of Socrates' famous solution.

Instead, it seemed to improvise its approach and, at one point, made a distinctly human-like error.

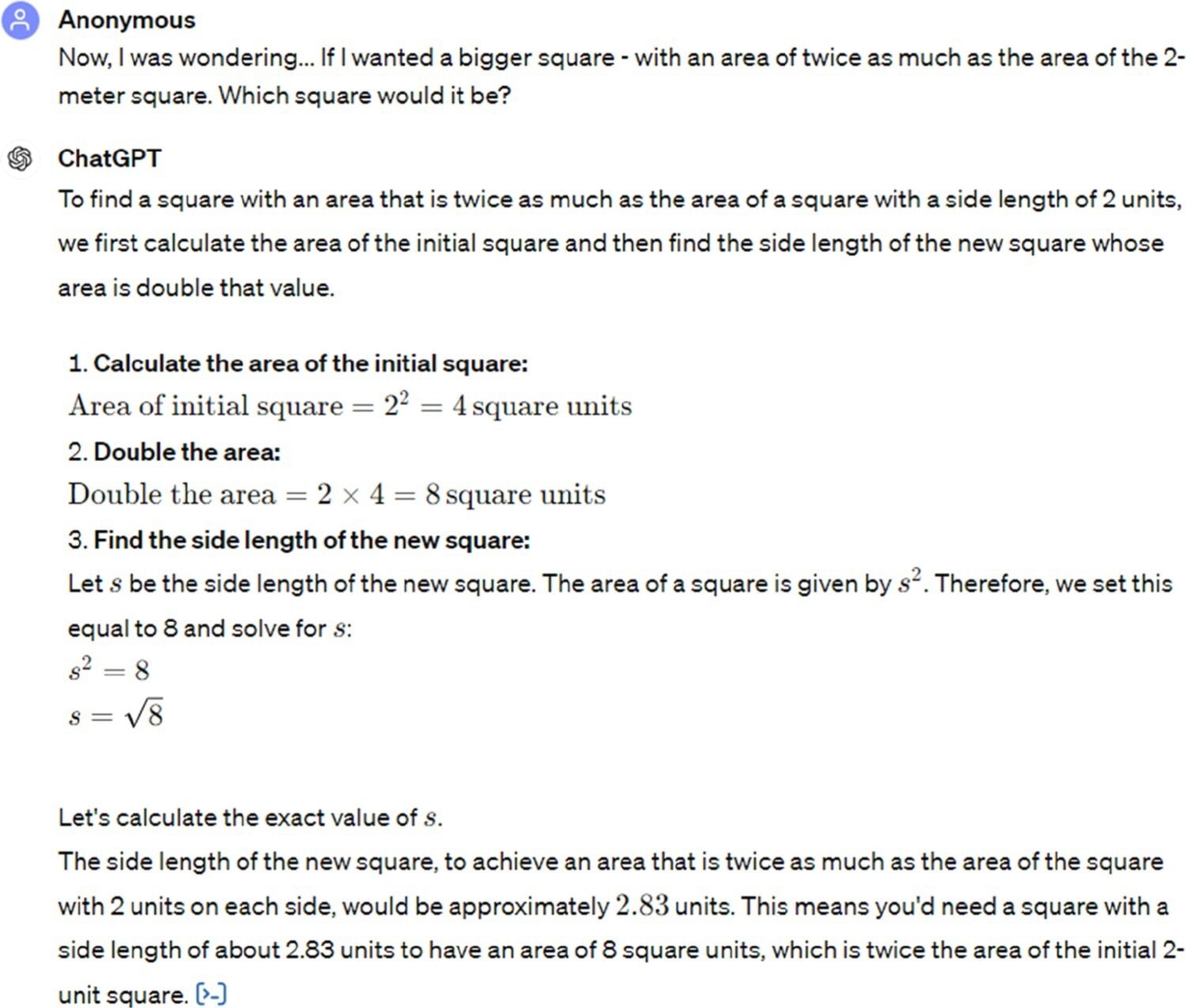

ChatGPT’s original answer for the Doubling the Square problem.

Research Team

The study was conducted by Dr. Nadav Marco, a visiting scholar at the University of Cambridge, and Andreas Stylianides, Professor of Mathematics Education at the University of Cambridge. Marco is permanently based at the Hebrew University and the David Yellin College of Education in Jerusalem.

Behaviour Characterised as Learner-Like

While the researchers are cautious about the results, stressing that LLMs do not think like humans or 'work things out', Marco characterised ChatGPT's behaviour as "learner-like".

"When we face a new problem, our instinct is often to try things out based on our past experience," Marco said. "In our experiment, ChatGPT seemed to do something similar. Like a learner or scholar, it appeared to come up with its own hypotheses and solutions."

Geometry vs Algebra

Because ChatGPT is trained on text and not diagrams, it tends to be weaker at the sort of geometrical reasoning that Socrates used in the doubling the square problem. Despite this, Plato's text is so well known that the researchers expected the chatbot to recognise their questions and reproduce Socrates' solution.

Intriguingly, it failed to do so. Asked to double the square, ChatGPT opted for an algebraic approach that would have been unknown in Plato's time.

It then resisted attempts to have it make the boy's mistake and stubbornly stuck to algebra, even when the researchers complained about its answer being an approximation. Only when Marco and Stylianides told it they were disappointed that, for all its training, it could not provide an "elegant and exact" answer, did the chatbot produce the geometrical alternative.
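The article does not reproduce ChatGPT's working, so the following is only a sketch of what an algebraic route to the problem typically looks like. The symbols $s$ (original side) and $x$ (new side) are assumed notation. It also shows why a decimal answer counts as an approximation: $\sqrt{2}$ is irrational, so any finite decimal falls short, whereas the diagonal construction yields the length exactly.

```latex
\[
x^2 = 2s^2
\quad\Longrightarrow\quad
x = s\sqrt{2} \approx 1.4142\,s
\]
```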

Yet when asked about Plato's work directly, ChatGPT demonstrated a comprehensive understanding of it. "If it had only been recalling from memory, it would almost certainly have referenced the classical solution of building a new square on the original square's diagonal straight away," Stylianides said. "Instead, it seemed to take its own approach."

Variants of the Problem

The researchers also posed a variant of Plato's problem, asking ChatGPT to double the area of a rectangle while retaining its proportions. Even though it was now aware of their preference for geometry, the chatbot stubbornly stuck to algebra.

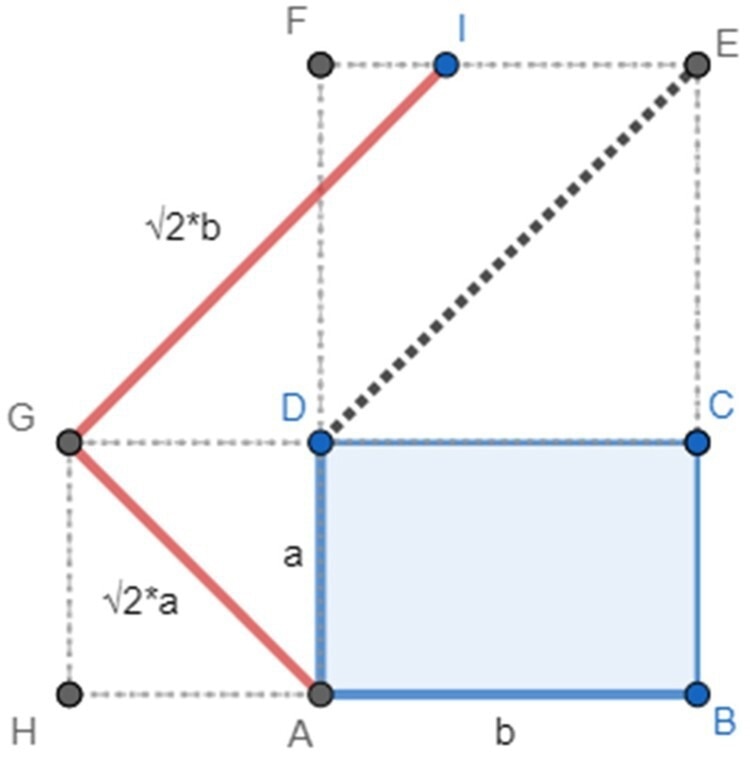

When pressed, it then mistakenly claimed that, because the diagonal of a rectangle cannot be used to double its size, a geometrical solution was unavailable. The point about the diagonal is true, but a different geometrical solution does exist.
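A brief check shows why the diagonal stops working for a general rectangle. This is a sketch under stated assumptions: the rectangle has sides $a$ and $b$ (assumed notation), and "using the diagonal" is read as enlarging the rectangle, with proportions kept, until the side of length $a$ becomes the diagonal $d$. The enlarged area equals $2ab$ only when $a = b$, that is, when the rectangle is a square.

```latex
\[
d = \sqrt{a^2 + b^2},
\qquad
k = \frac{d}{a},
\qquad
k^2 \, ab = \frac{(a^2 + b^2)\, b}{a}
\]
\[
\frac{(a^2 + b^2)\, b}{a} = 2ab
\iff a^2 + b^2 = 2a^2
\iff a = b
\]
```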

Marco suggested that the chance that this false claim originated from the chatbot's knowledge base was "vanishingly small." Instead, the chatbot appeared to be improvising its responses based on their previous discussion about the square.

Finally, Marco and Stylianides asked it to double the size of a triangle. The chatbot reverted to algebra yet again, but after more prompting, it came up with a correct geometrical answer.

A general geometrical solution for doubling the area of a rectangle: A Proof Without Words. Note. Given the rectangle ABCD with dimensions a × b (blue), construct two squares, one on each side (dashed lines). The diagonals of these two squares are of the desired length for constructing a rectangle whose area is twice the area of the original (red segments).
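The construction in the caption can be verified in two lines. In this sketch, $a$ and $b$ are the sides of the original rectangle as in the caption, and the squares built on them have diagonals $a\sqrt{2}$ and $b\sqrt{2}$. Using those diagonals as the new sides doubles the area and leaves the proportions unchanged.

```latex
\[
\left(a\sqrt{2}\right)\left(b\sqrt{2}\right) = 2ab
\]
\[
\frac{a\sqrt{2}}{b\sqrt{2}} = \frac{a}{b}
\]
```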

Educational Implications

The researchers emphasise the importance of not over-interpreting these results, as they could not scientifically observe the chatbot's coding. From the perspective of their digital experience as users, however, what emerged at that surface level was a blend of data retrieval and on-the-fly reasoning.

They liken this behaviour to the educational concept of a "zone of proximal development" (ZPD)—the gap between what a learner already knows and what they might eventually know with support and guidance. Perhaps, they argue, generative AI has a metaphorical "Chat's ZPD": in some cases, it will not be able to solve problems immediately but could do so with prompting.

The authors suggest that working with the chatbot in its ZPD can help turn its limitations into opportunities for learning. By prompting, questioning, and testing its responses, students will not only navigate the chatbot's boundaries but also develop the critical skills of proof evaluation and reasoning that lie at the heart of mathematical thinking.

"Unlike proofs found in reputable textbooks, students cannot assume that ChatGPT's proofs are valid. Understanding and evaluating AI-generated proofs are emerging as key skills that need to be embedded in the mathematics curriculum," Stylianides said.

"These are core skills we want students to master, but it means using prompts like, 'I want us to explore this problem together,' not, 'Tell me the answer,'" Marco added.
