A cross-disciplinary team shows that today’s leading image generators can dazzle with aesthetics yet still misunderstand basic prompts, exposing fundamental gaps between language and visual reasoning.

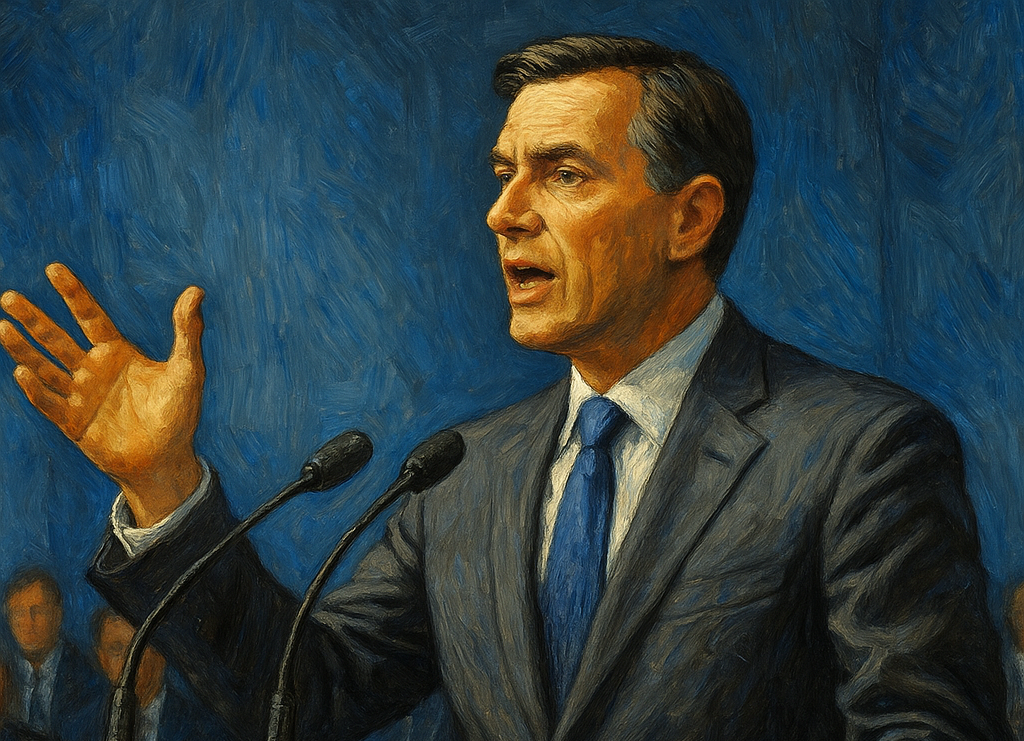

Image generated by DALL·E from the prompt “CEO giving a speech”

A team of scientists has examined how well Midjourney and DALL·E, two Generative Artificial Intelligence (GAI) systems, produce images from simple sentences. The verdict is mixed: despite impressive aesthetic output, the models continue to make elementary interpretive errors.

How do GAIs translate words into images?

Since the emergence of GAI tools like Midjourney and DALL·E, generating images from natural language prompts has become both intuitive and widely accessible. Yet the underlying question remains: how do these systems convert verbal descriptions into visual form? Four researchers from the University of Liège, the University of Lorraine, and EHESS conducted an interdisciplinary study that combined semiotics, computer science, and art history to explore this challenge.

"Our approach is based on a series of rigorous tests," explains Maria Giulia Dondero, semiotician at the University of Liège. "We submitted very specific requests to these two AI systems and analysed the images produced using criteria from the humanities, such as the arrangement of shapes, colours, gazes, the dynamism of the still image, and its rhythmic deployment." The results show that while GAIs can produce visually appealing images, they frequently fail to execute even straightforward instructions.

Where the models struggle

The study highlights recurrent limitations: difficulties interpreting negation (“a dog without a tail” often yields a dog with a hidden or partially obscured tail), challenges with spatial relations, improper placement of elements, and inconsistencies in representing perspective or gaze direction (“two women behind a door”). Simple actions such as “fighting” are sometimes rendered as dance scenes, and the systems often misrepresent temporal sequences (“starting to eat” or “having finished eating”).

"These GAIs allow us to reflect on our own way of seeing and representing the world," notes Enzo D'Armenio, former ULiège researcher and lead author. "They reproduce visual stereotypes from their databases, often shaped by Western imagery, exposing the limits of translating between verbal and visual language."

Repeat, validate, and analyse

The findings were validated through extensive repetition, up to fifty generations per prompt, to ensure statistical robustness. The study also revealed distinct aesthetic signatures across models. Midjourney tends to favour “aestheticised” renderings, adding embellishing textures at the expense of precise compliance with instructions, while DALL·E maintains a more neutral texture profile with tighter compositional control but greater variability in orientation or object count. Tests using the prompt “three vertical white lines on a black background” illustrate this pattern: consistent yet artefact-prone outputs for Midjourney versus fluctuating numbers and angles of lines for DALL·E.
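To make the repetition step concrete, here is a minimal Python sketch of how the share of prompt-compliant images across repeated generations could be summarised with an uncertainty range. The article does not say how the researchers scored or aggregated their results, so everything here is an assumption: the function names `wilson_interval` and `compliance_summary` are hypothetical, the Wilson score interval is just one common choice for a proportion from a small sample, and the labels in the usage lines are made-up placeholders, not data from the study.

```python
import math


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z = 1.96 is roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    if not 0 <= successes <= trials:
        raise ValueError("successes must lie between 0 and trials")
    p = successes / trials
    denom = 1 + z**2 / trials
    centre = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return max(0.0, centre - half), min(1.0, centre + half)


def compliance_summary(labels: list[bool]) -> tuple[float, tuple[float, float]]:
    """Return the observed compliance rate and its Wilson interval.

    `labels` holds one human judgement per generated image:
    True if the image matches the prompt, False otherwise.
    """
    hits = sum(labels)
    return hits / len(labels), wilson_interval(hits, len(labels))


# Hypothetical placeholder judgements for 50 generations of one prompt
# (illustrative only, NOT results from the study).
labels = [True] * 20 + [False] * 30
rate, (low, high) = compliance_summary(labels)
print(f"compliant: {rate:.0%}, approx. 95% interval: {low:.0%} to {high:.0%}")
```

Even with fifty generations per prompt, an interval of this kind remains fairly wide, which is one reason repeated generation matters before comparing two systems on the same prompt.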

Statistical machines shaped by their datasets

"GAIs produce the most plausible result based on their training databases and the parameters set by their designers," explains Adrien Deliège, mathematician at ULiège. "These design choices can standardise the gaze and perpetuate or redirect stereotypes." A striking example: for the prompt “CEO giving a speech,” DALL·E may predominantly generate women, whereas other systems produce mainly middle-aged white men, highlighting how dataset composition and model tuning influence the system’s “vision” of social categories.

Why the humanities matter for evaluating AI

The researchers emphasise that assessment of GAI systems must go beyond statistical accuracy and include tools from the humanities to understand cultural, symbolic, and representational biases. "AI tools are not simply automatic tools," concludes D'Armenio. "They translate our words according to their own logic, shaped by their databases and algorithms. Humanistic approaches are essential to understand and evaluate them."

Although these systems can already support creative workflows, they still fall short of reliably translating complex human ideas into coherent images.

Journal reference:

- Enzo D'Armenio, Maria Giulia Dondero, Adrien Deliège, Alessandro Sarti. For a Semiotic Approach to Generative Image AI: On Compositional Criteria. Semiotic Review, 2025, Images. DOI: 10.71743/ee5nrx33. HAL: hal-05128043. https://hal.univ-lorraine.fr/hal-05128043v1