Discover how IBM and the University of Illinois Urbana-Champaign's innovative hybrid cloud system is tackling AI’s growing demands with quantum integration, cross-layer automation, and a vision for sustainable, scalable solutions.

Paper: Transforming the Hybrid Cloud for Emerging AI Workloads. Image Credit: Ar_TH / Shutterstock

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

In an article recently posted on the arXiv preprint* server, researchers from IBM and the University of Illinois Urbana-Champaign (UIUC) proposed a comprehensive framework for redesigning hybrid cloud infrastructure to manage the growing complexity of artificial intelligence (AI) workloads. Their strategies target usability, manageability, and efficiency within hybrid cloud systems, with the aim of supporting advancements in AI-driven applications and scientific discovery.

Advancement in Hybrid Cloud Technology

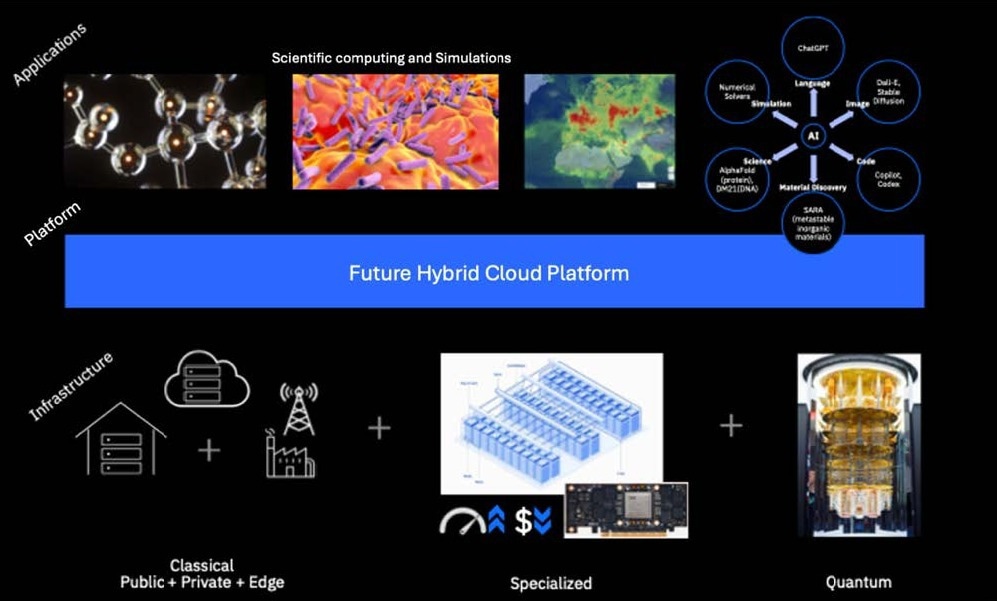

The hybrid cloud model combines on-premises data centers with public cloud services, offering flexibility, scalability, and resource optimization to accommodate diverse workloads. This approach serves many conventional workloads well; however, the rise of AI, especially large language models (LLMs) and generative AI, has introduced significant challenges. These workloads demand high-performance computing resources, advanced data management, and seamless integration across heterogeneous infrastructures.

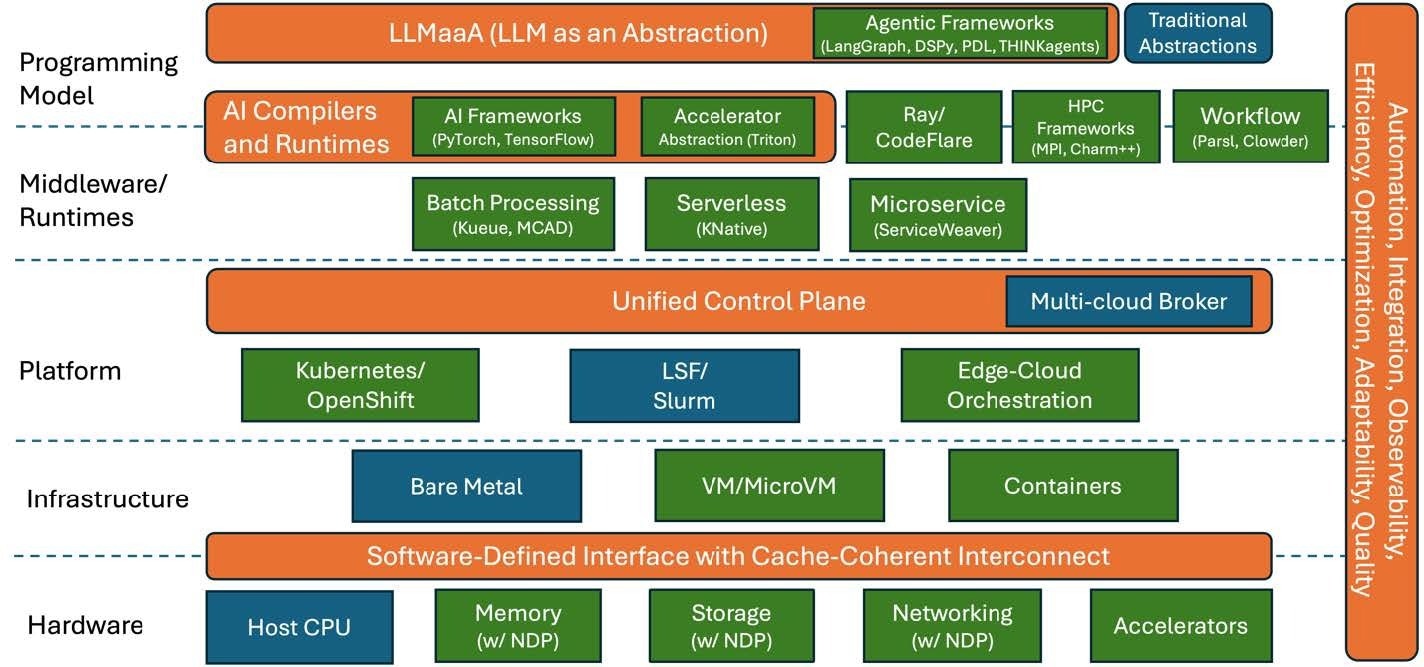

Traditional cloud systems often struggle to meet these demands because of their complexity and limited support for advanced programming models, so more integrated and sophisticated solutions are needed as AI workloads grow more complex. To address these limitations, the paper introduces the concept of "LLM as an Abstraction" (LLMaaA), a novel paradigm that simplifies user interaction with hybrid cloud systems through natural language interfaces.

Novel Approach for Hybrid Cloud Systems

In this paper, the authors focused on developing a novel co-design approach for hybrid cloud systems tailored to emerging AI workloads. They proposed a comprehensive framework emphasizing several key components: full-stack optimization, cross-layer automation, and the integration of quantum computing as it matures. The goal was to create a hybrid cloud environment that not only supports various AI frameworks and tools but also enhances energy efficiency, performance, and cost-effectiveness.

Key innovations include THINKagents, an AI-agentic system designed to enable intelligent collaboration between computational agents, and advanced technologies such as Compute Express Link (CXL) and GPUDirect for enhanced data transfer efficiency. By addressing critical challenges such as energy consumption, system performance, and user accessibility, the study aimed to establish a robust foundation for future AI applications.

Hybrid Cloud Platform of the Future.

Methodologies

The study employed a comprehensive approach to investigate the complexities of hybrid cloud systems, highlighting key research priorities. These included AI model optimization, end-to-end edge-cloud transformation, cross-layer automation, and application-adaptive systems. AI model optimization focused on developing unified abstractions across diverse infrastructures to enable efficient training and inference of AI models.

End-to-end edge-cloud transformation aimed to seamlessly integrate edge computing with cloud resources to support real-time AI applications. Cross-layer automation focused on intelligent resource allocation and scheduling to enhance performance and minimize operational complexity. Lastly, work on application-adaptive systems aimed to create cloud architectures that dynamically adjust to varying workload demands for optimal resource utilization. The researchers combined theoretical analysis and practical experiments to validate their framework using advanced simulation tools and hybrid prototypes.
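To make the idea of resource allocation across a hybrid cloud more concrete, here is a minimal sketch of a greedy placement routine. It is not the scheduler described in the paper; the `Site` and `Job` types, the site names, and the GPU counts are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Site:
    """A hypothetical pool of accelerators (on-premises or public cloud)."""
    name: str
    free_gpus: int


@dataclass
class Job:
    """A hypothetical AI workload that needs a fixed number of GPUs."""
    name: str
    gpus: int


def place_jobs(jobs: list[Job], sites: list[Site]) -> dict[str, Optional[str]]:
    """Greedy placement: largest jobs first, each on the site with the most free GPUs.

    Jobs that fit nowhere are mapped to None.
    """
    placement: dict[str, Optional[str]] = {}
    for job in sorted(jobs, key=lambda j: j.gpus, reverse=True):
        target = max(sites, key=lambda s: s.free_gpus)
        if target.free_gpus >= job.gpus:
            target.free_gpus -= job.gpus
            placement[job.name] = target.name
        else:
            placement[job.name] = None
    return placement


# Illustrative inputs only; none of these figures come from the paper.
sites = [Site("on_prem", free_gpus=8), Site("public_cloud", free_gpus=16)]
jobs = [Job("train", gpus=12), Job("finetune", gpus=6), Job("infer", gpus=4)]
placement = place_jobs(jobs, sites)
```

A production scheduler would also weigh cost, data locality, and energy use; the sketch only shows the basic shape of an allocation decision that spans on-premises and public cloud capacity.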

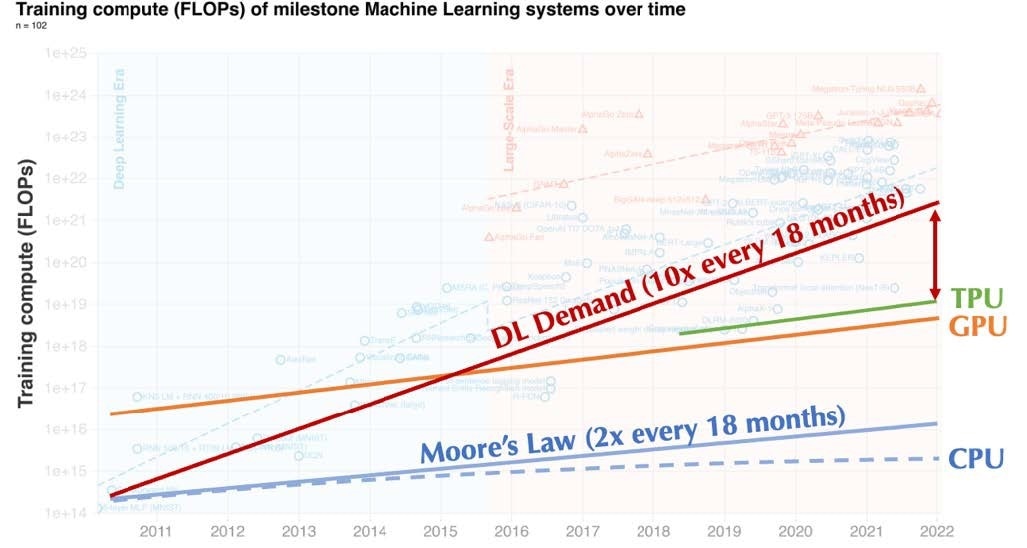

Trends in training compute demand of ML systems, showing the emergence of a new trend of large-scale models, contrasted with CPU, GPU, and TPU compute trends.

Key Findings and Insights

The paper offers several key insights into optimizing hybrid cloud infrastructures for AI workloads. Notably, the authors claim, based on theoretical models and early experimental results, that the proposed framework could improve energy efficiency, measured as performance per watt, by a factor of 100 to 1,000. Such gains matter because AI models continue to expand in size and complexity and therefore require ever more computing resources.
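The factor quoted above is a ratio of two performance-per-watt figures. The short sketch below shows that arithmetic; the function names and every number in it are hypothetical, picked only to make the calculation easy to follow, and are not measurements from the paper.

```python
def perf_per_watt(throughput: float, power_watts: float) -> float:
    """Return work done per unit of power (e.g. inferences per second per watt)."""
    if power_watts <= 0:
        raise ValueError("power_watts must be positive")
    return throughput / power_watts


def improvement_factor(baseline: float, improved: float) -> float:
    """Return how many times better the improved system is than the baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return improved / baseline


# Hypothetical systems, for illustration only.
baseline = perf_per_watt(throughput=1_000.0, power_watts=500.0)   # 2.0 per watt
improved = perf_per_watt(throughput=50_000.0, power_watts=250.0)  # 200.0 per watt
factor = improvement_factor(baseline, improved)                   # 100.0
```

In this made-up case, a 50-fold increase in throughput combined with a halving of power draw yields a 100-fold gain in performance per watt, the lower end of the range the authors cite.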

Additionally, the authors emphasized the importance of integrating quantum computing into hybrid cloud systems. As quantum technologies progress, they are expected to offer significant advantages in areas such as materials science and climate modeling. Initial demonstrations showcased how quantum algorithms, when integrated into classical workflows, could accelerate simulations and improve accuracy in these domains.

The study also highlighted the need for new programming models to simplify user interactions with complex AI systems. The introduction of LLMaaA enables intuitive interaction, allowing users to deploy and manage sophisticated AI models without requiring specialized technical expertise.

Transforming the Hybrid Cloud for the future. Orange bars represent new features; green boxes represent enhanced components to work with the newly added features; blue boxes are existing components.

Applications

This research has significant implications for scientific computing and environmental sustainability. The proposed framework could transform materials discovery by enabling AI-driven simulations to identify new materials with desirable properties more efficiently. In climate science, integrating AI and quantum computing could enhance predictive models, supporting better strategies for climate mitigation and adaptation.

Furthermore, the study paves the way for creating scalable multimodal data processing systems capable of handling diverse datasets in real-time applications. These advancements provide industries with the tools to leverage AI’s transformative potential while addressing challenges like energy consumption and system complexity. Additionally, the framework's modularity allows it to extend to other domains, such as personalized healthcare and autonomous systems.

Conclusion and Future Directions

In summary, this white paper presented a detailed vision for transforming hybrid cloud systems to effectively support advanced AI workloads and scientific applications. It emphasized the integration of technologies such as LLMs, foundation models, and quantum computing to improve performance, scalability, and efficiency in tackling complex challenges across various fields.

By prioritizing full-stack optimization, cross-layer automation, and incorporating quantum computing, the authors proposed a foundation for the next generation of cloud infrastructures focused on usability, efficiency, and adaptability. Future efforts should focus on refining these systems through real-world deployment and collaboration with broader scientific and industrial communities, ensuring that the technology evolves to meet emerging challenges.

Journal reference:

Preliminary scientific report. Chen, D., et al. Transforming the Hybrid Cloud for Emerging AI Workloads. arXiv, 2024, 2411.13239. DOI: 10.48550/arXiv.2411.13239, https://arxiv.org/abs/2411.13239