DreamConnect merges brain decoding with language-guided image editing, allowing users to modify imagined scenes directly from brain activity, a breakthrough at the frontier of human–AI interaction.

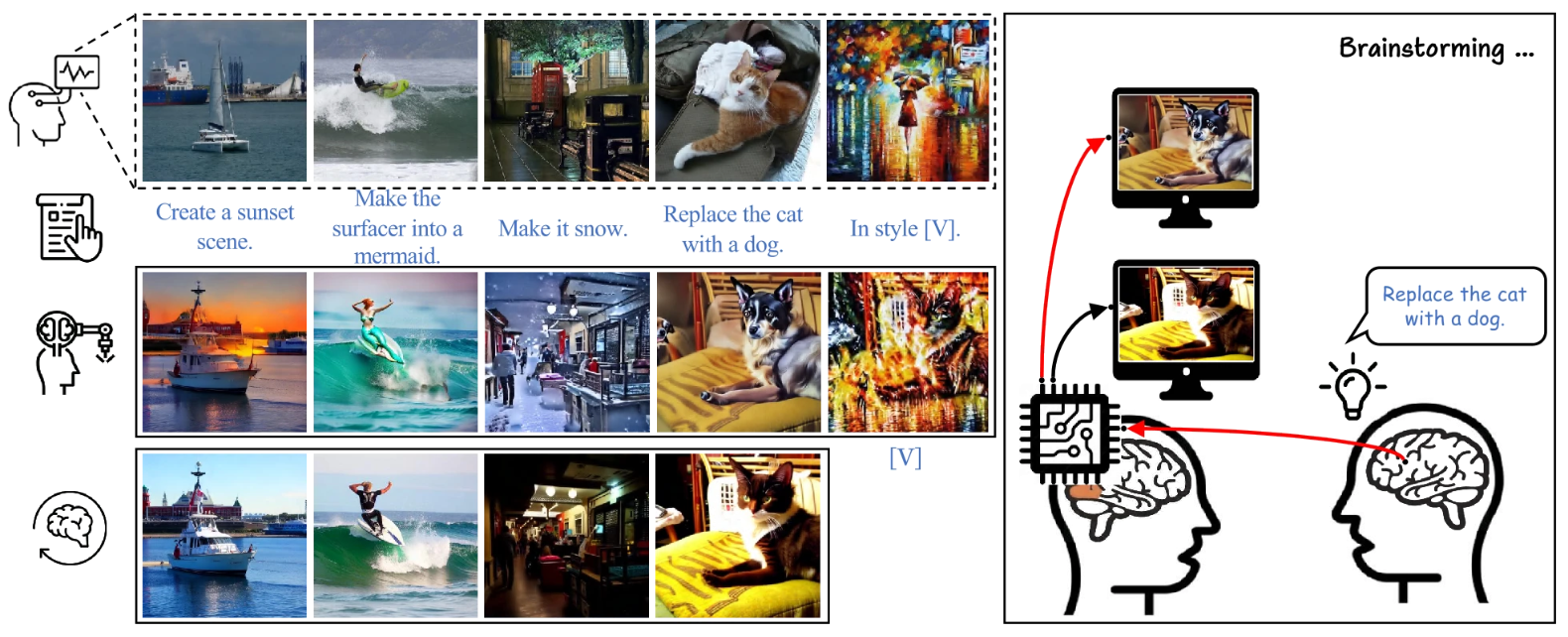

Overview of the framework pipeline. Can dreams be connected and actively influenced in future applications? As shown in the figure, DreamConnect performs the desired operation on the visual content. For example, suppose that someone imagines a lake view (first row) and another considers changing it to a sunset scene (second row). The system then faithfully generates the desired sunset ambiance (third row). indicates the style source. The images are sourced from Microsoft COCO.

Decoding human thoughts from brain signals has long been a scientific aspiration, and recent progress in machine learning has enabled partial reconstruction of images from functional magnetic resonance imaging (fMRI) recordings. However, existing methods primarily aim to recover what a person is seeing or imagining, without providing ways to interactively modify these mental images. Bridging the gap between brain activity and language remains a fundamental challenge, as brain signals are abstract and often ambiguous. Moreover, useful editing requires altering only the relevant features while preserving the rest. To address these challenges, the researchers set out to develop a system that not only decodes brain signals but also reshapes the decoded imagery through guided instructions.

Introducing DreamConnect

Researchers from the Tokyo Institute of Technology, Shanghai Jiao Tong University, KAUST, Nankai University, and MBZUAI have unveiled DreamConnect, a brain-to-image system that integrates fMRI with advanced diffusion models. Published in the journal Visual Intelligence, the study, titled "Connecting Dreams with Visual Brainstorming Instruction," introduces a dual-stream framework that interprets brain activity and edits the resulting imagery with natural language prompts. The work demonstrates how artificial intelligence can progressively refine image reconstructions derived from brain signals toward user-desired outcomes, marking an important step in connecting human "dreams" with interactive visualization.

How DreamConnect Works

DreamConnect introduces a dual-stream diffusion framework designed specifically for manipulating fMRI signals. The first stream interprets brain signals into rough visual content, while the second refines these images in line with natural language instructions. To coordinate the two streams, the team developed an asynchronous diffusion strategy that lets the interpretation stream establish semantic outlines before the instruction stream applies edits. For instance, when a participant imagines a horse and asks for it to become a unicorn, the system identifies the relevant visual features and transforms them accordingly.
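
The ordering idea behind the asynchronous strategy can be pictured with a toy schedule. The sketch below is illustrative only and is not the authors' implementation: the names `StepPlan` and `async_schedule`, and the `instruction_delay` parameter, are hypothetical, and the article does not say how many steps the interpretation stream runs before editing begins.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class StepPlan:
    """Which streams are active at one denoising step (hypothetical structure)."""
    step: int
    run_interpretation: bool
    run_instruction: bool


def async_schedule(total_steps: int, instruction_delay: int) -> List[StepPlan]:
    """Build a toy schedule in which the instruction stream starts late.

    The interpretation stream runs at every step; the instruction stream
    only joins once `instruction_delay` steps have passed. Both parameters
    are assumptions made for illustration.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if not 0 <= instruction_delay <= total_steps:
        raise ValueError("instruction_delay must lie in [0, total_steps]")
    return [
        StepPlan(
            step=t,
            run_interpretation=True,
            run_instruction=t >= instruction_delay,
        )
        for t in range(total_steps)
    ]


plan = async_schedule(total_steps=6, instruction_delay=2)
active_instruction_steps = [p.step for p in plan if p.run_instruction]
```

In this toy setup the first two steps belong to the interpretation stream alone, which mirrors the idea of fixing a semantic outline before any edit is applied.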

Precision Through Language and Region Awareness

To further enhance precision, the framework employs large language models (LLMs) to pinpoint the spatial regions relevant to each instruction. This enables region-aware editing that avoids altering unrelated content. The researchers validated DreamConnect on the Natural Scenes Dataset (NSD), one of the largest fMRI collections. Because no real-world paired datasets exist, they augmented NSD with synthetic instruction–image triplets generated with AI tools such as ChatGPT and InstructDiffusion, which made supervised training possible.
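
One common way to keep an edit confined to a region is to blend the edited result with the original under a mask. The following is a minimal sketch of that general technique, assuming a mask with values in [0, 1] is already available. Whether DreamConnect applies its region information this way is not stated in the article, and `blend_with_mask` is a hypothetical helper.

```python
from typing import List

Grid = List[List[float]]


def blend_with_mask(original: Grid, edited: Grid, mask: Grid) -> Grid:
    """Keep `original` where mask is 0 and take `edited` where mask is 1.

    Intermediate mask values interpolate linearly between the two grids.
    All three grids must have the same shape.
    """
    if not (len(original) == len(edited) == len(mask)):
        raise ValueError("grids must have the same number of rows")
    blended: Grid = []
    for o_row, e_row, m_row in zip(original, edited, mask):
        if not (len(o_row) == len(e_row) == len(m_row)):
            raise ValueError("rows must have the same length")
        blended.append(
            [m * e + (1.0 - m) * o for o, e, m in zip(o_row, e_row, m_row)]
        )
    return blended


# Toy 2x2 example: only the top-left cell is marked as relevant to the edit.
original = [[0.1, 0.2], [0.3, 0.4]]
edited = [[0.9, 0.8], [0.7, 0.6]]
mask = [[1.0, 0.0], [0.0, 0.0]]
blended = blend_with_mask(original, edited, mask)
```

Here only the masked cell takes the edited value, while the other three cells remain exactly as they were in the original.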

Performance and Results

Experimental results showed that DreamConnect not only reconstructs images with fidelity comparable to state-of-the-art models but also outperforms leading systems in instruction-based editing tasks, particularly on the CLIP-D and DINO-V2 similarity metrics. Unlike systems that first produce an intermediate reconstruction and then edit it in a separate pipeline, DreamConnect operates directly on latent representations derived from brain signals, streamlining the process of concept manipulation and visual imagination.
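
Embedding-based similarity scores of this kind are typically built on cosine similarity between feature vectors. The sketch below shows that building block, plus a directional variant that compares the change in image features with the change in text features. Treating CLIP-D as such a directional score is an assumption here, not something the article confirms, and the short vectors are made-up stand-ins for real CLIP or DINO-V2 embeddings.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two equal-length, non-zero vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


def directional_similarity(
    image_before: Sequence[float],
    image_after: Sequence[float],
    text_before: Sequence[float],
    text_after: Sequence[float],
) -> float:
    """Compare the image-feature change with the text-feature change.

    Assumed definition for illustration: cosine similarity between
    (image_after - image_before) and (text_after - text_before).
    """
    image_delta = [y - x for x, y in zip(image_before, image_after)]
    text_delta = [y - x for x, y in zip(text_before, text_after)]
    return cosine_similarity(image_delta, text_delta)


# Toy 2-D "embeddings": the image and the text both move along the second axis.
same = cosine_similarity([3.0, 4.0], [3.0, 4.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])
aligned_edit = directional_similarity(
    [1.0, 0.0], [1.0, 1.0], [0.0, 1.0], [0.0, 3.0]
)
```

A score near 1 means the two vectors, or the two changes, point in the same direction, while a score near 0 means they are unrelated.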

A Paradigm Shift in Brain–AI Interaction

"DreamConnect represents a fundamental shift in how we interact with brain signals," said Deng-Ping Fan, senior author of the study. "Rather than passively reconstructing what the brain perceives, we can now actively influence and reshape those perceptions using language. This opens up extraordinary opportunities in fields ranging from creative design to therapeutic applications. Of course, we must also remain mindful of ethical implications, ensuring privacy and responsible use as such technologies advance. But the proof-of-concept is a powerful demonstration of what is possible."

Potential Applications

The potential applications of DreamConnect are wide-ranging. In the creative industries, designers could translate mental concepts directly into visual outputs and refine them, accelerating brainstorming and prototyping. In healthcare, patients who struggle to communicate might use the system to express thoughts or emotions visually, offering new tools for therapy and diagnosis. Educational platforms may one day harness the technology to explore imagination-driven learning. However, the authors caution that ethical safeguards are essential given the risks of misuse and privacy invasion, and they propose technical measures, including authentication protocols, watermarking, and regulated usage monitoring, to ensure responsible deployment. With further development, DreamConnect could become a cornerstone of future human–AI collaboration, where imagination and technology merge seamlessly.

Current Limitations and Future Directions

The authors also note that current limitations include the dependence on fMRI data, challenges in adding small objects during editing, and the assumption that visual stimuli directly reflect internally generated "dreams." They plan to explore EEG signals and more complex interaction strategies, such as multi-turn dialogue, in future work.

Funding Information

This study was supported by the National Natural Science Foundation of China (No. 62476143).
