With a decaying probability, RAR randomly permutes the input sequence during autoregressive training, enhancing bidirectional context modeling. The approach improved the model’s performance, reaching a Fréchet inception distance (FID) score of 1.48 on the ImageNet-256 benchmark. RAR outperformed previous autoregressive, diffusion-based, and masked transformer-based methods.

Background

Past work on autoregressive language modeling has enabled the development of general-purpose AI systems, leveraging a next-token prediction approach for scalability and zero-shot generalization. Research shifted from pixel sequences to discrete-valued tokens in autoregressive visual modeling, improving performance.

However, these models typically used raster-scan order, limiting bidirectional context learning. In contrast, this work explores random factorization orders with annealing to significantly improve autoregressive image generation.

Randomized Autoregressive Context Modeling

Autoregressive modeling with a next-token prediction objective aims to maximize the likelihood of a discrete token sequence by predicting each token based on the preceding ones, forming a unidirectional dependency. This approach contrasts with bidirectional methods like masked transformers and diffusion models, which leverage context from both directions. While autoregressive models work well for language data, the challenge with images is the absence of a predefined order for processing tokens. The raster scan order is commonly used but introduces a unidirectional bias.

The proposed RAR approach optimizes bidirectional context within the autoregressive framework. Instead of using a fixed raster scan, RAR trains the model across all possible factorization orders, allowing the model to learn relationships between tokens in every direction. The approach is based on a permuted objective, where the model is trained using random permutations of the token sequence, maximizing bidirectional context while maintaining autoregressive properties. This method helps the model capture global dependencies and improves visual generation performance.

The work also addresses the challenge of token prediction in randomized autoregressive training by introducing target-aware positional embeddings. These embeddings guide the model in correctly predicting the next token in a permuted sequence, solving the potential confusion caused by the random order of tokens. By associating positional embeddings with the next token in the sequence, the model becomes aware of the specific token being predicted, improving training accuracy. The target-aware embeddings are designed to be merged with the original positional embeddings after training, ensuring minimal impact during inference.

Finally, the analysts propose randomness annealing to balance the randomness introduced by permuted training with the effectiveness of the raster order. This strategy adjusts the probability of using random permutations over the course of training, starting with complete randomness and gradually shifting to the raster order. The randomness annealing schedule allows the model to explore different permutations initially for better bidirectional context learning and then converge to the raster order for optimal visual generation. This approach enhances performance while preserving compatibility with traditional autoregressive methods.

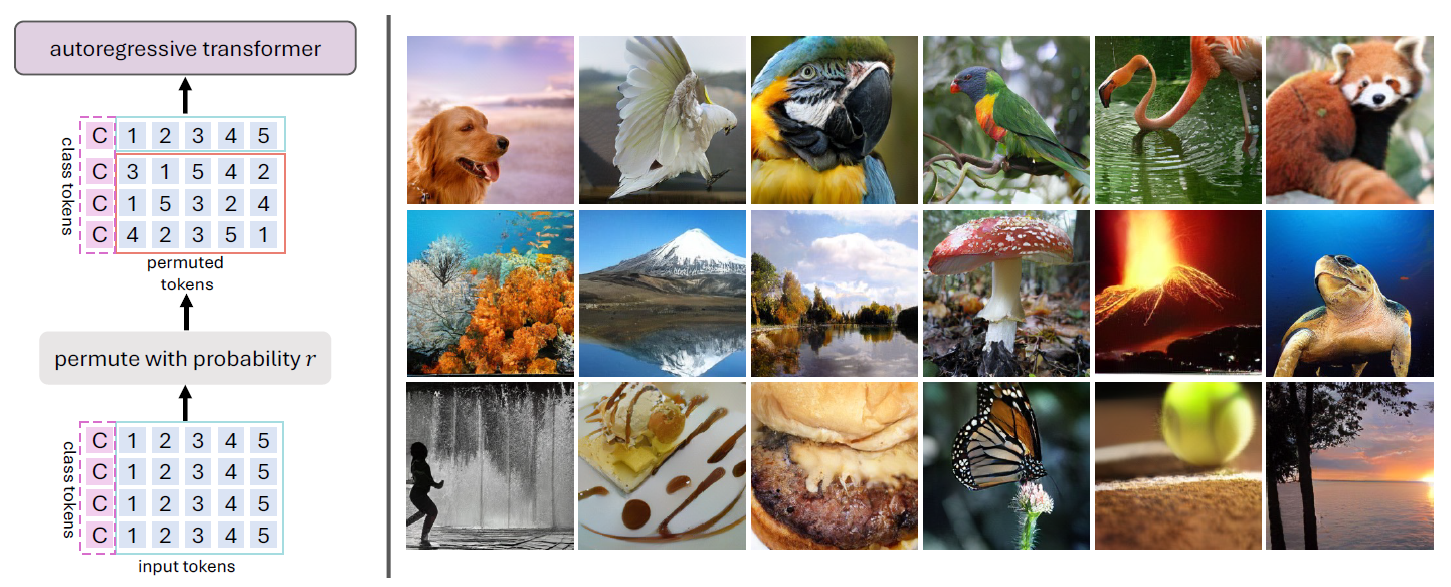

Overview of the proposed Randomized AutoRegressive (RAR) model, which is fully compatible with language modeling frameworks. Left: RAR introduces a randomness annealing training strategy to enhance the model’s ability to learn bidirectional contexts. During training, the input sequence is randomly permuted with a probability r, which starts at 1 (fully random permutations) and linearly decreases to 0, transitioning the model to a fixed scan order, such as raster scan, by the end of training. Right: Randomly selected images generated by RAR, trained on ImageNet.

Bidirectional Autoregressive Modeling Approach

The method section explains autoregressive modeling with a next-token prediction objective. The goal is to maximize the likelihood of a discrete token sequence by predicting each token based on the preceding ones, forming a unidirectional dependency. This approach contrasts with bidirectional methods like masked transformers and diffusion models, which leverage context from both directions.

While autoregressive models are adequate for language data, they face challenges with images due to the lack of a predefined order for processing tokens. The raster scan order, commonly used in image processing, introduces a unidirectional bias.

The proposed RAR approach optimizes bidirectional context within the autoregressive framework. Rather than relying on a fixed raster scan, RAR trains the model using random permutations of the token sequence, allowing it to learn relationships between tokens in all directions. This permuted objective maximizes bidirectional context while maintaining the autoregressive properties, helping the model capture global dependencies and improving visual generation performance.

Target-aware positional embeddings are introduced to address challenges in token prediction during randomized autoregressive training. These embeddings guide the model in correctly predicting the next token in a permuted sequence, eliminating confusion caused by the random order of tokens. Additionally, randomness annealing is proposed to balance the randomness of training with the effectiveness of the raster order. This strategy gradually shifts from random permutations to the raster order during training, enabling the model to explore diverse permutations for bidirectional learning and optimize visual generation performance.

Visualization samples from RAR. RAR is capable of generating high-fidelity image samples with great diversity.

Conclusion

To sum up, the paper introduced a simple yet effective strategy to improve the visual generation quality of autoregressive image generators. By using a randomized permutation objective, the approach enhanced bidirectional context learning while maintaining the autoregressive structure.

The proposed RAR model outperformed previous autoregressive models and leading non-autoregressive transformers and diffusion models. This research aimed to advance autoregressive transformers toward a unified visual understanding and generation framework.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

Journal reference:

- Preliminary scientific report.

Yu, Q., et al. (2024). Randomized Autoregressive Visual Generation. ArXiv. DOI: 10.48550/arXiv.2411.00776, https://arxiv.org/abs/2411.00776