A breakthrough wearable brain-computer interface combines EEG decoding with an AI co-pilot, allowing people, including those with paralysis, to control robotic arms and computer cursors with greater speed, accuracy, and independence.

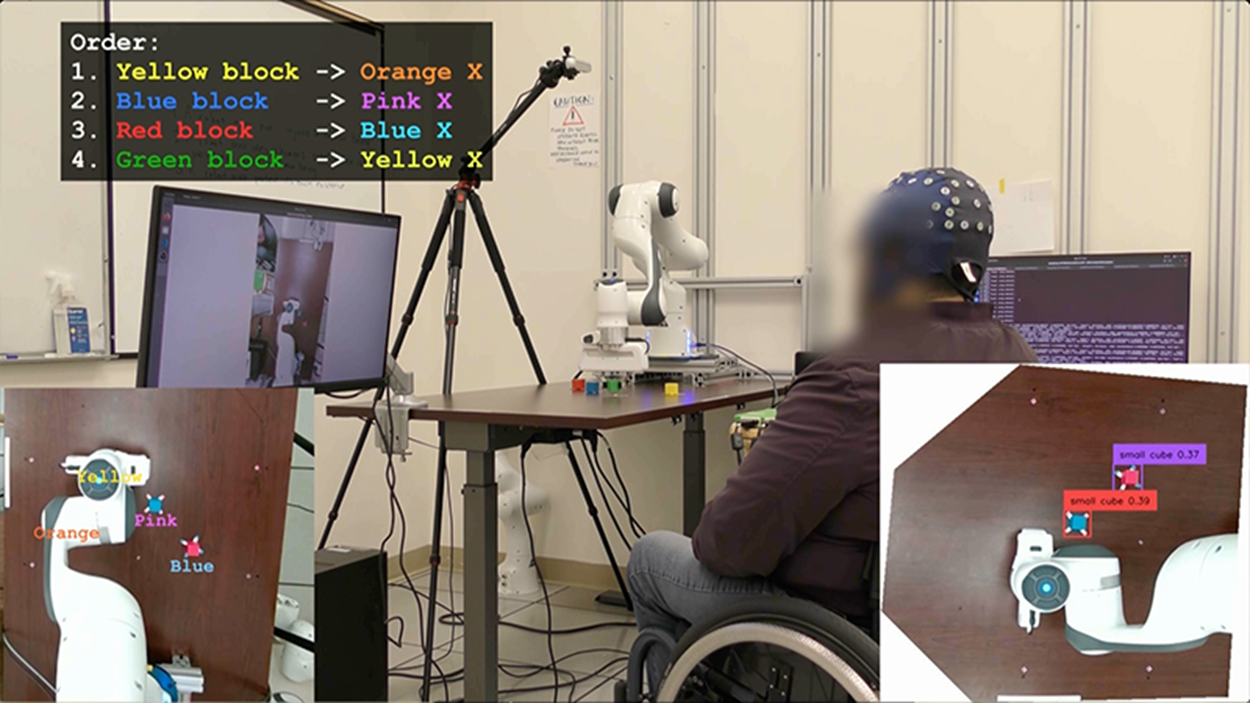

Using the AI-BCI system, a participant successfully completed the “pick-and-place” task, moving four blocks with the assistance of AI and a robotic arm. Image credit: Neural Engineering and Computation Lab/UCLA

UCLA engineers have developed a wearable, noninvasive brain-computer interface system that uses artificial intelligence as a co-pilot to help infer user intent and complete tasks by moving a robotic arm or a computer cursor.

Published in the journal Nature Machine Intelligence, the study demonstrates that the interface achieves a new level of performance in noninvasive brain-computer interface (BCI) systems. This could lead to a range of technologies to help people with limited physical capabilities, such as those with paralysis or neurological conditions, handle and move objects more easily and precisely.

How the System Works

The team developed custom algorithms to decode electroencephalography, or EEG, a method of recording the brain's electrical activity, and extract signals that reflect movement intentions. They paired the decoded signals with a camera-based artificial intelligence platform that interprets user direction and intent in real time. The system enables individuals to complete tasks significantly faster than they would without AI assistance.
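One common way to think about pairing a decoded signal with an AI co-pilot is as a weighted blend of two movement commands. The sketch below is illustrative only and is not the method described in the study: the function name `blend_velocity`, the weight `alpha`, and the idea of a simple linear blend are all assumptions made for the example.

```python
from typing import Tuple

Vec2 = Tuple[float, float]


def blend_velocity(decoded: Vec2, copilot: Vec2, alpha: float) -> Vec2:
    """Linearly blend two 2D velocity commands (hypothetical helper).

    decoded -- velocity inferred from the user's EEG (assumed input)
    copilot -- velocity suggested by the AI co-pilot (assumed input)
    alpha   -- assistance weight: 0.0 is user only, 1.0 is co-pilot only
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return (
        (1.0 - alpha) * decoded[0] + alpha * copilot[0],
        (1.0 - alpha) * decoded[1] + alpha * copilot[1],
    )


if __name__ == "__main__":
    # The user pushes right, the co-pilot suggests up, with equal weight.
    print(blend_velocity((1.0, 0.0), (0.0, 1.0), alpha=0.5))
```

In a shared-autonomy design of this kind, a lower `alpha` leaves more control with the user, while a higher value lets the co-pilot contribute more of the final movement.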

"By using artificial intelligence to complement brain-computer interface systems, we're aiming for much less risky and invasive avenues," said study leader Jonathan Kao, an associate professor of electrical and computer engineering at the UCLA Samueli School of Engineering. "Ultimately, we want to develop AI-BCI systems that offer shared autonomy, allowing people with movement disorders, such as paralysis or ALS, to regain some independence for everyday tasks."

Advantages Over Invasive Devices

State-of-the-art, surgically implanted BCI devices can translate brain signals into commands, but the benefits they currently offer are outweighed by the risks and costs associated with neurosurgery to implant them. More than two decades after they were first demonstrated, such devices are still limited to small pilot clinical trials. Meanwhile, wearable and other external BCIs have demonstrated a lower level of performance in detecting brain signals reliably.

Testing and Performance Evaluation

To address these limitations, the researchers tested their new noninvasive AI-assisted BCI with four participants — three without motor impairments and a fourth who was paralyzed from the waist down. Participants wore a head cap to record EEG, and the researchers used custom decoder algorithms to translate these brain signals into movements of a computer cursor and robotic arm. Simultaneously, an AI system with a built-in camera observed the decoded movements and assisted participants in completing two tasks.

In the first task, participants were instructed to move a cursor on a computer screen to hit eight targets, holding the cursor in place at each for at least half a second. In the second task, they were asked to activate a robotic arm to move four blocks on a table from their original spots to designated positions.
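The half-second hold requirement in the cursor task can be expressed as a simple dwell check. The following is a minimal sketch under stated assumptions: the circular target, the `radius` parameter, the `(time, x, y)` sample format, and the function name `target_acquired` are hypothetical and not taken from the study; only the 0.5-second hold comes from the task description.

```python
import math
from typing import Iterable, Tuple

Sample = Tuple[float, float, float]  # (time in seconds, x, y), assumed format


def target_acquired(
    samples: Iterable[Sample],
    target: Tuple[float, float],
    radius: float,
    hold_s: float = 0.5,
) -> bool:
    """Return True once the cursor stays inside the target for hold_s seconds.

    Samples must be in time order. Leaving the target resets the hold timer.
    """
    entered_at = None  # time the cursor most recently entered the target
    for t, x, y in samples:
        inside = math.hypot(x - target[0], y - target[1]) <= radius
        if not inside:
            entered_at = None
            continue
        if entered_at is None:
            entered_at = t
        if t - entered_at >= hold_s:
            return True
    return False


if __name__ == "__main__":
    steady = [(0.0, 0.0, 0.0), (0.25, 0.1, 0.0), (0.5, 0.0, 0.1)]
    print(target_acquired(steady, target=(0.0, 0.0), radius=1.0))
```

Resetting the timer whenever the cursor leaves the target means a brief pass over the target does not count as a hit.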

All participants completed both tasks significantly faster with AI assistance. Notably, the paralyzed participant completed the robotic arm task in approximately six and a half minutes with AI assistance, whereas without it, he was unable to complete the task.

Interpreting Intent Through AI

The BCI deciphered electrical brain signals that encoded the participants' intended actions. Using a computer vision system, the custom-built AI inferred the users' intent, rather than tracking their eye movements, to guide the cursor and position the blocks.
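To give a rough sense of what inferring intent can mean in practice, the sketch below picks the candidate target that best aligns with the decoded movement direction. This is a simplified heuristic chosen for illustration, not the study's AI model: the cosine-similarity scoring, the function name `infer_target`, and the assumption that a vision system has already supplied candidate positions are all hypothetical.

```python
import math
from typing import Optional, Sequence, Tuple

Vec2 = Tuple[float, float]


def infer_target(
    cursor: Vec2, decoded_dir: Vec2, candidates: Sequence[Vec2]
) -> Optional[int]:
    """Return the index of the candidate best aligned with decoded_dir.

    Alignment is the cosine of the angle between the decoded direction and
    the vector from the cursor to each candidate. Returns None when the
    decoded direction is zero or no candidate can be scored.
    """
    norm_d = math.hypot(decoded_dir[0], decoded_dir[1])
    if norm_d == 0.0:
        return None
    best_idx: Optional[int] = None
    best_score = -math.inf
    for i, (cx, cy) in enumerate(candidates):
        dx, dy = cx - cursor[0], cy - cursor[1]
        dist = math.hypot(dx, dy)
        if dist == 0.0:
            continue  # cursor is already on this candidate
        score = (dx * decoded_dir[0] + dy * decoded_dir[1]) / (dist * norm_d)
        if score > best_score:
            best_idx, best_score = i, score
    return best_idx


if __name__ == "__main__":
    # Candidates above, to the right of, and to the left of the cursor.
    print(infer_target((0.0, 0.0), (1.0, 0.0), [(0.0, 5.0), (5.0, 0.0), (-5.0, 0.0)]))
```

A real co-pilot would likely combine many noisy decoded samples over time rather than act on a single direction estimate.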

"Next steps for AI-BCI systems could include the development of more advanced co-pilots that move robotic arms with more speed and precision, and offer a deft touch that adapts to the object the user wants to grasp," said co-lead author Johannes Lee, a UCLA electrical and computer engineering doctoral candidate advised by Kao. "And adding in larger-scale training data could also help the AI collaborate on more complex tasks, as well as improve EEG decoding itself."

Research Team and Support

The paper's authors are all members of Kao's Neural Engineering and Computation Lab, including Sangjoon Lee, Abhishek Mishra, Xu Yan, Brandon McMahan, Brent Gaisford, Charles Kobashigawa, Mike Qu, and Chang Xie. A member of the UCLA Brain Research Institute, Kao also holds faculty appointments in the Computer Science Department and the Interdepartmental Ph.D. Program in Neuroscience.

Funding and Future Development

The research was funded by the National Institutes of Health and the Science Hub for Humanity and Artificial Intelligence, which is a collaboration between UCLA and Amazon. The UCLA Technology Development Group has applied for a patent related to the AI-BCI technology.

Journal reference:

- Lee, J. Y., Lee, S., Mishra, A., Yan, X., McMahan, B., Gaisford, B., Kobashigawa, C., Qu, M., Xie, C., & Kao, J. C. (2025). Brain–computer interface control with artificial intelligence copilots. Nature Machine Intelligence, 1-14. DOI: 10.1038/s42256-025-01090-y, https://www.nature.com/articles/s42256-025-01090-y