By letting chips handle more work with the same resources, this breakthrough FPGA design could make AI systems faster, greener, and far less power-hungry.

Research: Double Duty: FPGA Architecture to Enable Concurrent LUT and Adder Chain Usage. Image Credit: Gorodenkoff / Shutterstock

*Important notice: arXiv publishes preliminary scientific reports that are not peer-reviewed and, therefore, should not be regarded as definitive, used to guide development decisions, or treated as established information in the field of artificial intelligence research.

An innovation from Cornell University researchers reduces the energy required to power artificial intelligence (AI), a step toward minimizing the carbon footprint of data centers and AI infrastructure.

As AI systems become increasingly powerful, they also become more power-hungry, raising questions about sustainability. The research team is tackling that challenge by rethinking the hardware that powers AI, aiming to make it faster, more efficient, and less energy-intensive.

Award-Winning Research

The researchers received a Best Paper Award for their findings, which were presented at the 2025 International Conference on Field-Programmable Logic and Applications, held from September 1 to 5 in Leiden, Netherlands.

Focus on FPGAs

Their work focuses on a type of computer chip called a Field-Programmable Gate Array (FPGA). Unlike traditional chips, FPGAs can be reprogrammed for different tasks after they are manufactured. This makes them especially useful in rapidly evolving fields such as AI, cloud computing, and wireless communication.

"FPGAs are everywhere—from network cards and communication base stations to ultrasound machines, CAT scans, and even washing machines," said co-author Mohamed Abdelfattah, assistant professor at Cornell Tech. "AI is coming to all of these devices, and this architecture helps make that transition more efficient."

How FPGAs Work

Inside each FPGA chip are computing units called logic blocks. These blocks contain components that can handle different types of computing:

- Lookup Tables (LUTs): Components that conduct a wide range of logical operations depending on what the chip needs to do.

- Adder chains: Components that perform fast arithmetic operations, such as adding numbers, which are essential for tasks like image recognition and natural language processing.

In conventional FPGA designs, these components are tightly linked, meaning the adder chains can only be accessed through the LUTs. This limits the chip's efficiency, especially for AI workloads that rely heavily on arithmetic operations.
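
As a rough illustration of the two components described above, the following Python sketch models a LUT as a small stored truth table and an adder chain as a ripple of one-bit full adders. The function names (`make_lut`, `adder_chain`, and the bit helpers) are hypothetical and chosen for this example only; this is a behavioral toy, not a description of any vendor's logic block.

```python
def make_lut(truth_table):
    """Return a k-input LUT: a function that looks up a stored truth table."""
    def lut(*bits):
        index = 0
        for bit in bits:  # the first argument is the most significant index bit
            index = (index << 1) | bit
        return truth_table[index]
    return lut


def full_adder(a, b, carry_in):
    """Add three bits and return (sum_bit, carry_out)."""
    total = a + b + carry_in
    return total & 1, total >> 1


def adder_chain(a_bits, b_bits):
    """Ripple-carry addition of two equal-length, little-endian bit lists."""
    carry = 0
    out = []
    for a, b in zip(a_bits, b_bits):
        sum_bit, carry = full_adder(a, b, carry)  # carry ripples to the next bit
        out.append(sum_bit)
    out.append(carry)  # final carry-out becomes the top result bit
    return out


def to_bits(value, width):
    """Little-endian bit list of a non-negative integer."""
    return [(value >> i) & 1 for i in range(width)]


def from_bits(bits):
    """Inverse of to_bits."""
    return sum(bit << i for i, bit in enumerate(bits))


# The same LUT hardware becomes a different gate by changing its contents.
xor2 = make_lut([0, 1, 1, 0])
and2 = make_lut([0, 0, 0, 1])
```

The point of the sketch is the contrast: a LUT is generic (any function of its inputs, set by its contents), while the adder chain is fixed-function hardware with a dedicated carry path between neighboring bits.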

The Double Duty Innovation

The research team developed "Double Duty," a new chip architecture, to address this problem. The design allows LUTs and adder chains to work independently and simultaneously within the same logic block. In other words, the chip can now do more with the exact same processing resources.
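
To see why independent access matters, consider a deliberately idealized counting model. It assumes each logic element holds exactly one LUT and one adder bit, and it ignores input-pin limits, routing, and the fact that a LUT in front of an adder can sometimes do useful pre-processing. The function name and the model are assumptions made for illustration and are not taken from the paper.

```python
def logic_elements_needed(adder_bits, lut_functions, concurrent):
    """Idealized count of logic elements for a mix of adder bits and LUT functions.

    Assumption: one LUT and one adder bit per logic element.
    concurrent=False models a design where using the adder occupies the LUT.
    concurrent=True models a design where both can be used at the same time.
    """
    if concurrent:
        # Adder bits and unrelated LUT functions can share the same elements.
        return max(adder_bits, lut_functions)
    # Each element serves either an adder bit or a LUT function, not both.
    return adder_bits + lut_functions


conventional = logic_elements_needed(8, 8, concurrent=False)
shared = logic_elements_needed(8, 8, concurrent=True)
```

This gives a best-case bound for a perfectly balanced mix. Real savings depend on the circuit, the available input pins, and how well the tools can pack unrelated logic together.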

This innovation is particularly impactful for deep neural networks, AI models loosely inspired by how the human brain processes information. These models are often "unrolled" onto FPGAs, that is, laid out as fixed circuits for faster, more efficient processing.

"We focused on a mode where FPGAs are actually really good at AI acceleration," said Abdelfattah. "By making a small architectural change, we make these unrolled neural networks much more efficient, playing to the strengths of FPGAs instead of treating them like generic processors."

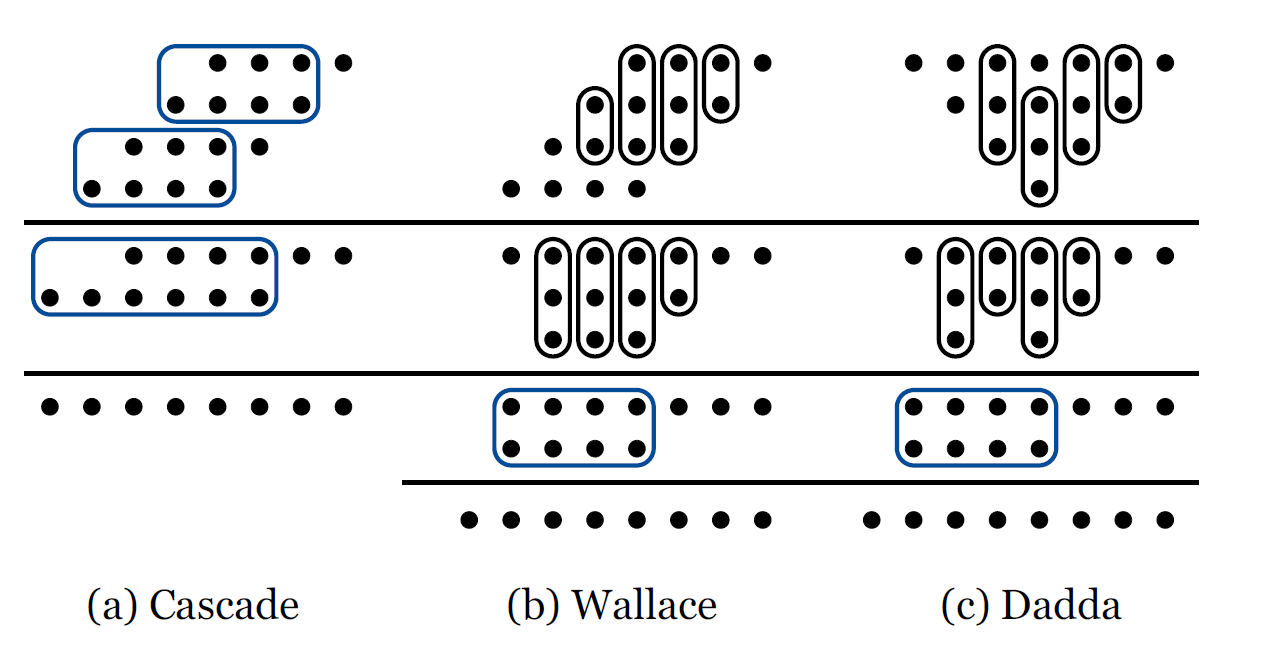

Conceptual diagram of a 4-bit multiplier using Cascade, Wallace, and Dadda algorithms. Pill shapes represent LUT-based compressors and rectangles represent full adder chains. Each layer represents a reduction stage.
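
The reduction stages in the caption can be sketched in software. The example below builds the partial-product bits of a small multiplier, repeatedly compresses each column with 3:2 compressors (three bits in, a sum bit and a carry bit out), and then performs a final addition that stands in for the adder chain. It is a simplified greedy reduction in the spirit of Wallace-style trees, not a faithful reproduction of the Cascade, Wallace, or Dadda schedules in the figure; the function name and structure are assumptions for illustration.

```python
def multiply_by_reduction(a, b, width=4):
    """Multiply two unsigned `width`-bit integers by column compression.

    Returns (product, number_of_reduction_stages).
    """
    # columns[w] holds every bit whose weight is 2**w.
    columns = [[] for _ in range(2 * width)]
    for i in range(width):
        for j in range(width):
            # One AND gate per partial-product bit.
            columns[i + j].append(((a >> i) & 1) & ((b >> j) & 1))

    stages = 0
    # Compress until every column holds at most two bits.
    while any(len(col) > 2 for col in columns):
        next_columns = [[] for _ in range(len(columns) + 1)]
        for w, col in enumerate(columns):
            i = 0
            while len(col) - i >= 3:
                # 3:2 compressor: three bits in, sum stays, carry moves up.
                total = col[i] + col[i + 1] + col[i + 2]
                next_columns[w].append(total & 1)
                next_columns[w + 1].append(total >> 1)
                i += 3
            next_columns[w].extend(col[i:])  # leftover bits pass through
        columns = next_columns
        stages += 1

    # Final carry-propagate addition of the remaining rows
    # (the role played by the adder chain in hardware).
    product = 0
    for w, col in enumerate(columns):
        product += sum(col) << w
    return product, stages


product, stages = multiply_by_reduction(13, 11)
```

In this picture the compressors are small logic functions that map naturally onto LUTs, while the final addition maps onto the adder chain, which is one way to see why a multiplier benefits when both resources in a logic block are usable at once.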

Results and Impact

In testing, the Double Duty design reduced the chip area needed for specific AI tasks by more than 20% and improved overall performance on a large suite of circuits by nearly 10%. That means smaller or fewer chips could perform the same work, resulting in lower energy use.

Further Reading

For additional details, see the Cornell Chronicle story.

Journal reference:

- Preliminary scientific report.

Pun, J., Dai, X., Zgheib, G., Iyer, M. A., Boutros, A., Betz, V., & Abdelfattah, M. S. (2025). Double Duty: FPGA Architecture to Enable Concurrent LUT and Adder Chain Usage. arXiv. https://arxiv.org/abs/2507.11709