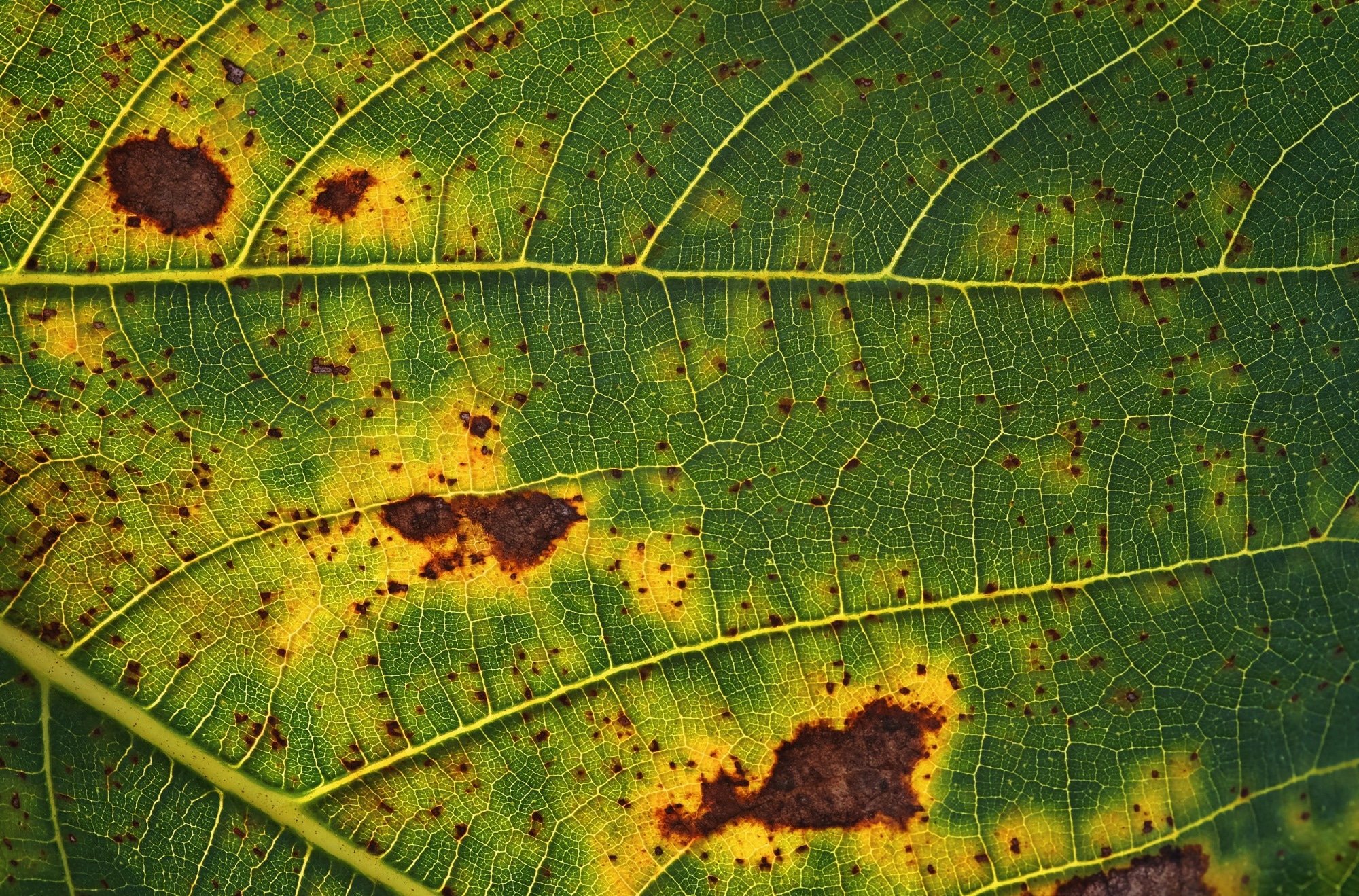

A new AI framework that isolates diseased leaf regions improves the accuracy of plant disease severity assessment, an advance that could help farmers cut costs, reduce pesticide use, and protect global food security.

Image Credit: OMG Snap / Shutterstock

Introducing LLRL for Plant Disease Assessment

The method, called location-guided lesion representation learning (LLRL), tackles a common problem: background interference that leads existing models to confuse healthy areas with lesions. By training the system to focus directly on diseased regions, the team achieved more reliable disease severity classification across apple, potato, and tomato leaves.

The Challenge of Plant Diseases

Global food production must increase by 50% by 2050 to meet the needs of a growing population, yet plant diseases already cut annual yields by 13%–22%, causing billions of dollars in agricultural losses worldwide. Traditional severity assessment relies on human experts or laboratory testing, both of which are costly, time-consuming, and subjective. Machine learning and deep learning now enable automated recognition of plant diseases, often with accuracy above 90%. However, most models still struggle to distinguish lesions from background features such as shadows, soil, or healthy tissue, and these errors limit their usefulness for guiding pesticide use. To address these challenges, the researchers developed a method that targets lesion areas directly, making assessments more reliable.

Publication Details

A study published in Plant Phenomics by Qi Wang's team at Guizhou University presents a method that improves both the accuracy and the interpretability of plant leaf disease severity assessment, supporting more precise pesticide application and more sustainable agricultural management.

How LLRL Works

The LLRL framework was designed to improve the accuracy of plant leaf disease severity assessment. It integrates three components: an image generation network (IG-Net), which uses a diffusion model to generate paired healthy–diseased images; a location-guided lesion representation learning network (LGR-Net), which uses these pairs to isolate lesion areas and produces a dual-branch feature encoder (DBF-Enc) enriched with lesion-specific knowledge; and a hierarchical lesion fusion assessment network (HLFA-Net), which fuses these features to classify disease severity precisely.
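Why paired images help can be illustrated with a deliberately simple, hypothetical example. Assuming a generated healthy image is pixel-aligned with its diseased counterpart, pixels whose color changes between the two are candidate lesion pixels, while shadows or soil that appear in both images cancel out. The sketch below thresholds that per-pixel difference. It is not LGR-Net, which learns lesion locations with a neural network; the function name, colors, and threshold are illustrative assumptions.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]          # (R, G, B), each 0-255
Image = List[List[Pixel]]
Mask = List[List[int]]                # 1 = candidate lesion pixel, 0 = unchanged


def lesion_mask_from_pair(healthy: Image, diseased: Image,
                          threshold: float = 40.0) -> Mask:
    """Hypothetical helper (not from the study): flag pixels whose RGB color
    moved more than `threshold` (Euclidean distance) between two aligned images."""
    if len(healthy) != len(diseased) or any(
            len(h) != len(d) for h, d in zip(healthy, diseased)):
        raise ValueError("paired images must have the same dimensions")
    mask: Mask = []
    for row_h, row_d in zip(healthy, diseased):
        mask_row = []
        for (r1, g1, b1), (r2, g2, b2) in zip(row_h, row_d):
            # Background shared by both images yields a distance near zero.
            dist = ((r1 - r2) ** 2 + (g1 - g2) ** 2 + (b1 - b2) ** 2) ** 0.5
            mask_row.append(1 if dist > threshold else 0)
        mask.append(mask_row)
    return mask


green, brown = (40, 160, 50), (120, 80, 30)   # illustrative leaf colors
healthy = [[green, green], [green, green]]
diseased = [[green, brown], [green, green]]   # one lesion pixel, top-right
print(lesion_mask_from_pair(healthy, diseased))  # [[0, 1], [0, 0]]
```

A fixed color threshold like this breaks down quickly on real photographs with lighting changes or imperfect alignment, which is one reason a learned approach is preferable.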

To validate the method, the researchers built a dataset of 12,098 images of apple, potato, and tomato leaf diseases, supplemented by more than 10,000 generated image pairs. Experiments were implemented in Python 3.8.19 with PyTorch 1.13.1 and GPU acceleration. LGR-Net was trained with the Adam optimizer, a weight decay of 1 × 10⁻⁴, and a scheduled learning-rate decay over 4,000 iterations. HLFA-Net, by contrast, was trained for 100 epochs at a fixed learning rate of 0.01, reusing the shared DBF-Enc module with its weights frozen.
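The article says only that LGR-Net's learning rate followed a schedule over 4,000 iterations, without naming the schedule or its starting value. Polynomial decay is one common choice for this kind of training, so the minimal sketch below assumes it, with a hypothetical base rate of 1 × 10⁻⁴ and power 0.9, and contrasts it with HLFA-Net's fixed rate of 0.01. Only the 4,000 iterations and the 0.01 rate come from the study.

```python
# Hypothetical sketch: the polynomial shape, base_lr=1e-4 and power=0.9 are
# ASSUMPTIONS; the article reports only "scheduled decay over 4,000 iterations".
LGR_MAX_ITERS = 4000      # from the article (LGR-Net)
HLFA_FIXED_LR = 0.01      # from the article (HLFA-Net, 100 epochs)


def lgr_net_lr(iteration: int, base_lr: float = 1e-4, power: float = 0.9,
               max_iters: int = LGR_MAX_ITERS) -> float:
    """Polynomial decay: base_lr * (1 - t / T) ** power, with t clamped to [0, T]."""
    t = min(max(iteration, 0), max_iters)
    return base_lr * (1.0 - t / max_iters) ** power


def hlfa_net_lr(epoch: int) -> float:
    """HLFA-Net keeps a constant rate; `epoch` is accepted only for a uniform interface."""
    return HLFA_FIXED_LR


if __name__ == "__main__":
    for it in (0, 1000, 2000, 3000, 4000):
        print(f"LGR-Net iteration {it:>4}: lr = {lgr_net_lr(it):.2e}")
    print(f"HLFA-Net, any epoch: lr = {hlfa_net_lr(50)}")
```

In PyTorch, the same closed form is available as `torch.optim.lr_scheduler.PolynomialLR`, and a shared module such as DBF-Enc can be frozen by calling `requires_grad_(False)` on it before building the second-stage optimizer.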

Results and Validation

Compared against 12 benchmark models on real, generated, and mixed datasets, LLRL consistently outperformed the alternatives, achieving at least 1% higher accuracy and reaching up to 92.4% when pre-training and attention mechanisms were combined. Visualization experiments confirmed its ability to localize lesion regions precisely (IoU = 0.934, F1 = 0.9615), and feature maps concentrated progressively on lesions at lower resolutions. Grad-CAM analysis showed attention shifting toward lesions as severity increased, consistent with established plant pathology. The framework also generalized well across crop species, with particularly robust results on the tomato and potato datasets, highlighting its potential as a versatile and reliable tool for agricultural disease management.
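The localization scores above use standard overlap metrics for binary masks. Intersection over union (IoU) is TP / (TP + FP + FN), and F1 (equivalent to the Dice coefficient for a single mask) is 2TP / (2TP + FP + FN). Here TP counts correctly marked lesion pixels, FP counts background pixels wrongly marked as lesion, and FN counts missed lesion pixels. The sketch below computes both for one predicted and one reference mask. The article does not say how scores were aggregated across images, so this illustrates the definitions rather than reproducing the study's evaluation.

```python
from typing import List, Tuple

Mask = List[List[int]]  # 1 = lesion pixel, 0 = background


def iou_and_f1(pred: Mask, truth: Mask) -> Tuple[float, float]:
    """Return (IoU, F1) for two binary masks of the same shape."""
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    tp = fp = fn = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            if p and t:
                tp += 1          # lesion predicted and present
            elif p:
                fp += 1          # lesion predicted on a healthy/background pixel
            elif t:
                fn += 1          # lesion pixel the model missed
    union = tp + fp + fn
    # Convention: two empty masks count as perfect agreement.
    iou = tp / union if union else 1.0
    f1 = 2 * tp / (2 * tp + fp + fn) if union else 1.0
    return iou, f1


pred = [[1, 1], [0, 0]]
truth = [[1, 0], [0, 0]]
print(iou_and_f1(pred, truth))  # (0.5, 0.6666666666666666)
```

Because F1 weights true positives twice, it is always at least as high as IoU for the same mask pair.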

Applications for Precision Agriculture

By grading disease severity accurately, LLRL provides a foundation for precision pesticide application. Farmers could photograph leaves with a smartphone to assess disease progression on the spot and receive guidance on dosage and timing. At larger scales, the system could be integrated with drone and satellite imaging for automated field-wide monitoring, reducing the need for manual inspection. Such targeting would lower costs and cut unnecessary pesticide use, reducing environmental pollution while safeguarding farmer income.

Funding

This research was supported by the National Key R&D Program of China (2024YFE0214300), Guizhou Provincial Science and Technology Projects ([2024]002, CXTD[2023]027), Guizhou Province Youth Science and Technology Talent Project ([2024]317), Guiyang Guian Science and Technology Talent Training Project ([2024] 2-15), and the Talent Introduction Program of Guizhou University under Grant No. (2021)89.

Journal reference:

- Yu, Y., Wu, X., Yu, P., Wan, Q., Dan, Y., Xiao, Y., & Wang, Q. (2025). Location-guided lesions representation learning via image generation for assessing plant leaf diseases severity. Plant Phenomics, 7(2), 100058. DOI: 10.1016/j.plaphe.2025.100058, https://www.sciencedirect.com/science/article/pii/S2643651525000640?via%3Dihub