A new topology-aware deep learning framework developed at Chiba University combines spectral, topological, and spatiotemporal EEG data to decode motor imagery with higher accuracy than existing state-of-the-art models, paving the way for thought-controlled prosthetics and next-generation neurorehabilitation systems.

Image Credit: DancingPhilosopher, CC BY-SA 4.0 <https://creativecommons.org/licenses/by-sa/4.0>, via Wikimedia Commons

Electroencephalography (EEG) is a powerful non-invasive technique that measures and records the brain's electrical activity. Electrodes placed across the scalp detect the small electrical signals produced as neurons communicate, capturing brainwave patterns linked to different cognitive and motor processes. EEG has diverse applications in cognitive neuroscience, neurological diagnostics, and the development of robotic prosthetics and brain-computer interface (BCI) systems.

Motor Imagery EEG: A Key to Brain-Computer Interaction

Distinct brain activities generate unique EEG signal patterns. One notable example is motor imagery (MI), a mental process that activates brain regions responsible for movement without any actual motion. These imagined movements produce stable, distinguishable EEG patterns, forming the foundation for MI-based BCI applications used in prosthetic control and neurorehabilitation. However, decoding MI-EEG signals remains challenging due to their low signal-to-noise ratio, non-linear behavior, and temporal variability.

Limitations of Traditional Machine Learning Approaches

Conventional decoding methods use machine learning algorithms to extract spatial, temporal, and spectral features from EEG data, such as the power in specific frequency bands and how that power is distributed across frequencies. While deep learning models have recently improved decoding performance, they still struggle to capture complex dependencies among EEG features and across electrode networks.
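To make "spectral features" concrete, here is a minimal sketch of one conventional feature: per-channel band power computed from a Welch power spectral density estimate (the estimator TA-MFF's S-Net also uses). Everything here is an illustrative assumption rather than the authors' code: the 250 Hz sampling rate, the mu (8–13 Hz) and beta (13–30 Hz) bands, the 256-sample Hann windows with 50% overlap, the synthetic signals, and the function names `welch_psd`, `band_power`, and `band_power_features`.

```python
import numpy as np

# Assumed frequency bands commonly used for motor imagery (not from the article)
BANDS = {"mu": (8.0, 13.0), "beta": (13.0, 30.0)}


def welch_psd(x, fs, nperseg=256, overlap=0.5):
    """One-sided Welch PSD of a 1-D signal (Hann window, even nperseg assumed)."""
    step = int(nperseg * (1 - overlap))
    window = np.hanning(nperseg)
    scale = fs * np.sum(window ** 2)  # normalizes to power per Hz
    periodograms = []
    for start in range(0, len(x) - nperseg + 1, step):
        seg = x[start:start + nperseg]
        seg = (seg - seg.mean()) * window  # remove DC offset, then taper
        periodograms.append(np.abs(np.fft.rfft(seg)) ** 2 / scale)
    psd = np.mean(periodograms, axis=0)  # averaging segments reduces variance
    psd[1:-1] *= 2  # fold in negative frequencies (DC and Nyquist bins excluded)
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, psd


def band_power(freqs, psd, lo, hi):
    """Approximate power in [lo, hi) Hz by summing PSD bins times bin width."""
    mask = (freqs >= lo) & (freqs < hi)
    return psd[mask].sum() * (freqs[1] - freqs[0])


def band_power_features(eeg, fs):
    """eeg: (n_channels, n_samples) -> feature matrix (n_channels, n_bands)."""
    feats = np.zeros((eeg.shape[0], len(BANDS)))
    for ch, signal in enumerate(eeg):
        freqs, psd = welch_psd(signal, fs)
        for b, (lo, hi) in enumerate(BANDS.values()):
            feats[ch, b] = band_power(freqs, psd, lo, hi)
    return feats


# Synthetic 3-channel, 4-second recording at an assumed 250 Hz sampling rate
fs = 250
t = np.arange(4 * fs) / fs
rng = np.random.default_rng(0)
eeg = 0.5 * rng.standard_normal((3, t.size))
eeg[0] += np.sin(2 * np.pi * 10 * t)  # mu-band rhythm
eeg[1] += np.sin(2 * np.pi * 20 * t)  # beta-band rhythm
feats = band_power_features(eeg, fs)
print(feats.round(3))  # rows: channels, columns: mu, beta
```

Channel 0 should show most of its power in the mu column and channel 1 in the beta column. In practice, `scipy.signal.welch` provides a tested version of the same estimator; the hand-rolled version above only exposes the segment-window-average steps.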

Introducing the Topology-Aware Multiscale Feature Fusion (TA-MFF) Network

To address these challenges, Ph.D. student Chaowen Shen and Professor Akio Namiki from Chiba University’s Graduate School of Science and Engineering have developed the Topology-Aware Multiscale Feature Fusion (TA-MFF) network. Their findings were published online on September 26, 2025, and will appear in Volume 330, Part A of the journal Knowledge-Based Systems.

“Current deep learning models mainly extract spatiotemporal features from EEG signals while overlooking dependencies among spectral features,” explained Prof. Namiki. “Moreover, most methods extract only shallow topological features between electrode connections. Our approach introduces three new modules that address these limitations effectively.”

Architecture and Modules of the TA-MFF Network

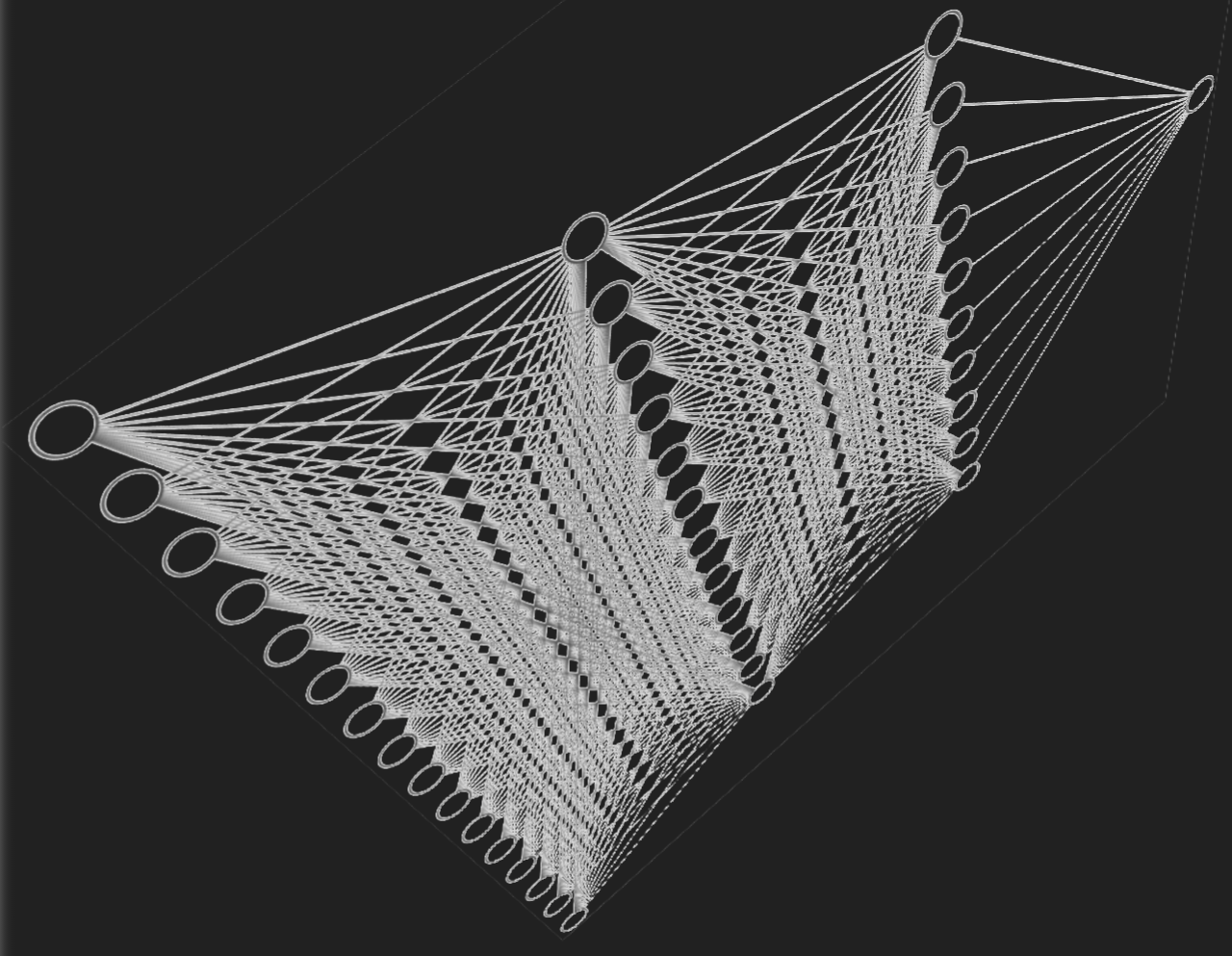

The TA-MFF network combines two main subsystems: a Spatiotemporal Network (ST-Net) and a Spectral Network (S-Net). Its architecture includes three novel components: the Spectral-Topological Data Analysis-Processing (S-TDA-P) module, the Inter-Spectral Recursive Attention (ISRA) module, and the Spectral-Topological and Spatiotemporal Feature Fusion (SS-FF) unit. The S-TDA-P and ISRA modules operate in parallel within S-Net.

Extracting and Integrating Spectral-Topological Features

Within the S-Net, EEG signals are first converted into power spectral density (PSD) representations, which describe how signal power is distributed across frequencies. The conversion uses Welch's method, which averages the spectra of overlapping signal segments to reduce noise in the estimate. The S-TDA-P module then applies persistent homology, a technique from topological data analysis that tracks which structural features of the data persist across a range of scales, to extract deep spectral-topological relationships among electrodes and identify enduring signal patterns. In parallel, the ISRA module models correlations among frequency bands, amplifying important spectral components while filtering out redundant information.
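The article does not describe how S-TDA-P builds its topological representation. As a minimal sketch of persistent homology itself, the code below computes 0-dimensional persistence (the scales at which groups of electrodes merge into one connected cluster) for a Vietoris-Rips filtration, using a union-find structure. The electrode dissimilarity, 1 minus the Pearson correlation between electrodes' PSD vectors, and the helper names `h0_persistence` and `correlation_distance` are hypothetical choices for illustration. Higher-dimensional features such as loops need a dedicated library such as GUDHI or Ripser.

```python
import numpy as np


def h0_persistence(dist):
    """0-dimensional persistence pairs of a Vietoris-Rips filtration.

    dist: symmetric (n, n) dissimilarity matrix. Every vertex is born at
    scale 0; a component dies when an edge merges it into another one.
    The last surviving component never dies and gets death = inf.
    """
    n = dist.shape[0]
    parent = list(range(n))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    # Edge (i, j) enters the filtration at scale dist[i, j]
    edges = sorted((dist[i, j], i, j) for i in range(n) for j in range(i + 1, n))
    pairs = []
    for length, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[rj] = ri  # two clusters merge; one of them dies here
            pairs.append((0.0, float(length)))
    pairs.append((0.0, float("inf")))
    return pairs


def correlation_distance(features):
    """Hypothetical electrode dissimilarity: 1 - Pearson correlation of rows."""
    return np.clip(1.0 - np.corrcoef(features), 0.0, None)


# Four electrodes forming two tight pairs: (0, 1) and (2, 3)
dist = np.array([[0.0, 0.1, 0.9, 0.8],
                 [0.1, 0.0, 0.7, 0.9],
                 [0.9, 0.7, 0.0, 0.2],
                 [0.8, 0.9, 0.2, 0.0]])
print(h0_persistence(dist))  # [(0.0, 0.1), (0.0, 0.2), (0.0, 0.7), (0.0, inf)]
```

The short bars (dying at 0.1 and 0.2) reflect the two tight electrode pairs, while the bar that lasts until 0.7 shows that the two pairs stay separate over a wide range of scales. Long-lived bars like this are the "enduring" structure that persistent homology is designed to surface.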

Fusing Spectral, Topological, and Spatiotemporal Domains

The SS-FF unit integrates outputs from S-Net and ST-Net using a two-step fusion strategy. First, the topological and spectral features are merged; the combined spectral-topological features are then integrated with the spatiotemporal features. Unlike conventional concatenation-based approaches, this multilevel fusion captures intricate dependencies across domains, resulting in a more comprehensive representation of brain activity.
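The article does not specify the operator SS-FF uses at each fusion step. The sketch below illustrates the two-step ordering with a hypothetical gated fusion, chosen only because it is a simple operator that models interactions between its inputs: a sigmoid gate computed from both inputs decides, element by element, how much of each one passes through. The `GatedFusion` class, the 16-dimensional feature vectors, and the random untrained weights are all assumptions; the three input vectors merely stand in for the S-TDA-P, ISRA, and ST-Net outputs.

```python
import numpy as np

rng = np.random.default_rng(0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class GatedFusion:
    """Hypothetical gated fusion of two equal-width feature vectors.

    gate = sigmoid(W [a; b] + c); output = gate * a + (1 - gate) * b,
    so each output element is an input-dependent mix of a and b.
    """

    def __init__(self, dim, rng):
        self.W = rng.normal(scale=0.1, size=(dim, 2 * dim))  # untrained weights
        self.c = np.zeros(dim)

    def __call__(self, a, b):
        gate = sigmoid(self.W @ np.concatenate([a, b]) + self.c)
        return gate * a + (1.0 - gate) * b


dim = 16
fuse_topo_spec = GatedFusion(dim, rng)  # step 1: topological + spectral
fuse_with_st = GatedFusion(dim, rng)    # step 2: step-1 output + spatiotemporal

topo = rng.standard_normal(dim)  # stand-in for S-TDA-P output
spec = rng.standard_normal(dim)  # stand-in for ISRA output
st = rng.standard_normal(dim)    # stand-in for ST-Net output

spectral_topological = fuse_topo_spec(topo, spec)
fused = fuse_with_st(spectral_topological, st)
print(fused.shape)  # (16,)
```

Plain concatenation would simply stack the three vectors into one 48-dimensional input and leave any cross-domain interaction to later layers. Here, by contrast, each gate is computed from both of its inputs, so one feature set directly modulates how much of the other is kept, and the spectral-topological result is formed before it meets the spatiotemporal features.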

Performance and Potential Applications

Through this architecture, the TA-MFF network achieves superior classification accuracy on MI-EEG decoding benchmarks, outperforming state-of-the-art models. “Our approach holds strong potential for robust and efficient EEG-based motor imagery decoding,” said Prof. Namiki. “Understanding how the brain controls movement can help people who are immobile. This research could enable control of computers, wheelchairs, or robotic arms through thought alone, helping those with movement disabilities to live more independently.”

Advancing Brain-Computer Interfaces Through Topology-Aware AI

This work represents a significant advance toward accurate, resilient, and adaptive MI-EEG decoding. By integrating topological insights with deep learning, the TA-MFF framework moves closer to seamless brain-controlled systems, bridging neuroscience and technology so that machines can respond naturally to human intent, thoughts, and emotions.
