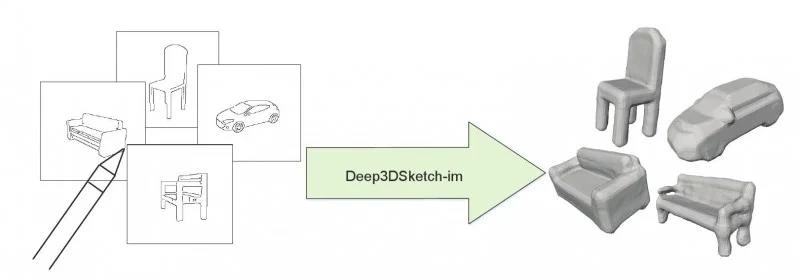

A single freehand sketch is all it takes! Deep3DSketch-im uses AI to turn abstract lines into detailed 3D models, paving the way for user-friendly design tools in everything from home interiors to manufacturing.

Pipeline of the Deep3DSketch-im sketch-based 3D modeling approach

In a paper published in Frontiers of Information Technology & Electronic Engineering, Volume 25, Issue 1, 2024, researchers from Zhejiang University propose Deep3DSketch-im, a sketch-based 3D modeling approach that builds a model from a single freehand sketch.

Sketch-based 3D modeling approaches fall into two categories: interactive approaches, which are challenging for novice users, and end-to-end approaches, which are more straightforward but lack customizability. Existing deep learning methods for single-view 3D reconstruction struggle to generate high-quality 3D shapes, and they mainly focus on learning 3D geometry from 2D colored images. Sketches, by contrast, lack important information such as texture, lighting, and shading. The study therefore takes a deep learning approach that learns to interpret the abstract representation of a sketch, and develops Deep3DSketch-im, a method that can accurately interpret sparse and ambiguous sketches and reconstruct high-quality 3D meshes from them.

Deep3DSketch-im represents 3D shapes with a signed distance field (SDF): the object's surface is modeled as a level set (iso-surface) of the SDF, from which high-resolution surface meshes are generated. The network comprises three stages: input processing, feature extraction, and output prediction. To generate a 3D model, each 3D query point is projected onto the image plane, and multiscale convolutional neural network (CNN) features are gathered at its projected location. An independently trained pose-estimation network can predict the sketch viewpoint, which reduces the input required from the user. A weighted loss function focuses the network on recovering details near and inside the iso-surface.
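The query-point mechanism described above can be illustrated with a small NumPy sketch. It is not the authors' implementation: the pinhole camera, the sphere used as a toy SDF, the random arrays standing in for CNN feature maps, and the loss weights (`delta`, `w_near`, `w_far`) are all assumptions chosen for illustration, and every function name is hypothetical.

```python
import numpy as np

def sphere_sdf(points, radius=0.5):
    """Toy SDF: signed distance to a sphere at the origin (negative inside)."""
    return np.linalg.norm(points, axis=-1) - radius

def project_points(points, focal=1.0, cam_dist=2.0):
    """Pinhole projection of (N, 3) points to 2D image coordinates.
    Assumed camera: on the +z axis at cam_dist, looking at the origin."""
    depth = cam_dist - points[:, 2]
    return focal * points[:, :2] / depth[:, None]

def bilinear_sample(feat, uv):
    """Sample a (C, H, W) feature map at normalized coords uv in [-1, 1]."""
    _, h, w = feat.shape
    x = np.clip((uv[:, 0] + 1.0) * 0.5 * (w - 1), 0, w - 1)
    y = np.clip((uv[:, 1] + 1.0) * 0.5 * (h - 1), 0, h - 1)
    x0, y0 = np.floor(x).astype(int), np.floor(y).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    wx, wy = x - x0, y - y0
    top = feat[:, y0, x0] * (1 - wx) + feat[:, y0, x1] * wx
    bottom = feat[:, y1, x0] * (1 - wx) + feat[:, y1, x1] * wx
    return (top * (1 - wy) + bottom * wy).T  # shape (N, C)

def gather_multiscale(feature_maps, uv):
    """Concatenate per-point features sampled from maps of different resolution."""
    return np.concatenate([bilinear_sample(f, uv) for f in feature_maps], axis=1)

def weighted_sdf_loss(pred, target, delta=0.01, w_near=4.0, w_far=1.0):
    """L1 loss that up-weights points inside or close to the surface.
    The threshold and weights are illustrative, not values from the paper."""
    weights = np.where(target < delta, w_near, w_far)
    return float(np.mean(weights * np.abs(pred - target)))

# Usage: random arrays stand in for CNN feature maps at two scales.
rng = np.random.default_rng(0)
points = rng.uniform(-1.0, 1.0, size=(5, 3))
feature_maps = [rng.normal(size=(8, 32, 32)), rng.normal(size=(16, 16, 16))]
uv = project_points(points)
features = gather_multiscale(feature_maps, uv)  # one 24-dim vector per point
target = sphere_sdf(points)
loss = weighted_sdf_loss(np.zeros(len(points)), target)
```

In a full system, a decoder network would map each point and its gathered feature vector to a predicted SDF value, and a mesh would then be extracted from the iso-surface of the predicted field; both steps are omitted here.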

The study evaluates Deep3DSketch-im on both synthetic and real hand-drawn datasets, and also examines how the model performs without view input. The results show that the model generates high-fidelity 3D models and offers a good user experience in practical applications. The authors also discuss ways to improve the model further, such as domain generalization and domain adaptation.

One particular application of the method is rapid home interior design. The sketch can precisely define the six-dimensional (6D) pose, that is, the position and orientation, of the generated object. The study demonstrates that the sketch-guided approach is more efficient and easier to use than the "touch-based" approach when users manipulate generated 3D objects within a scene. Future research can expand the application scope of this sketch-based modeling tool, including the use of appropriate shape representations, so that it can eventually be applied to computer-aided design (CAD), computer-aided manufacturing (CAM), and many other fields.
